
摘要:

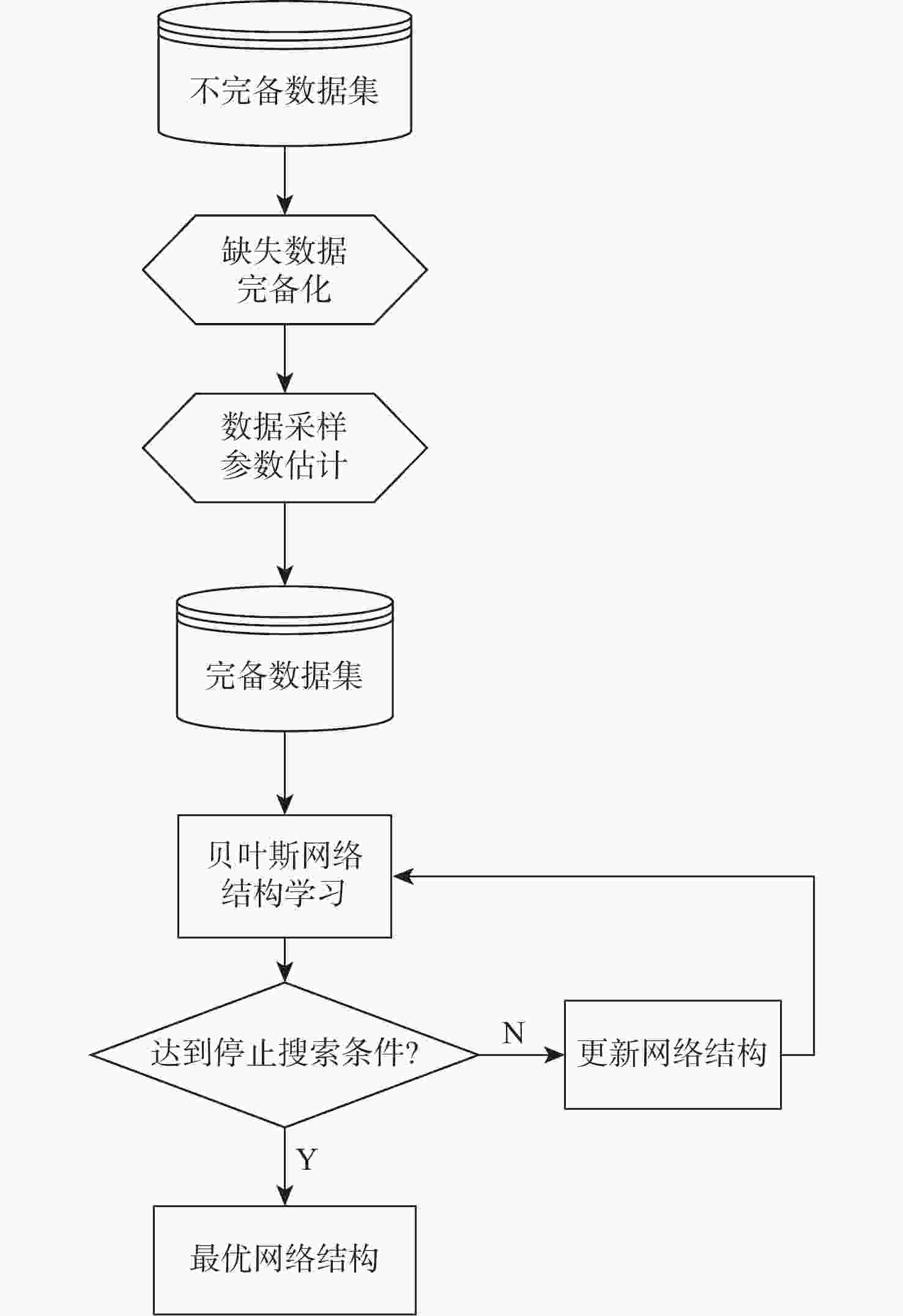

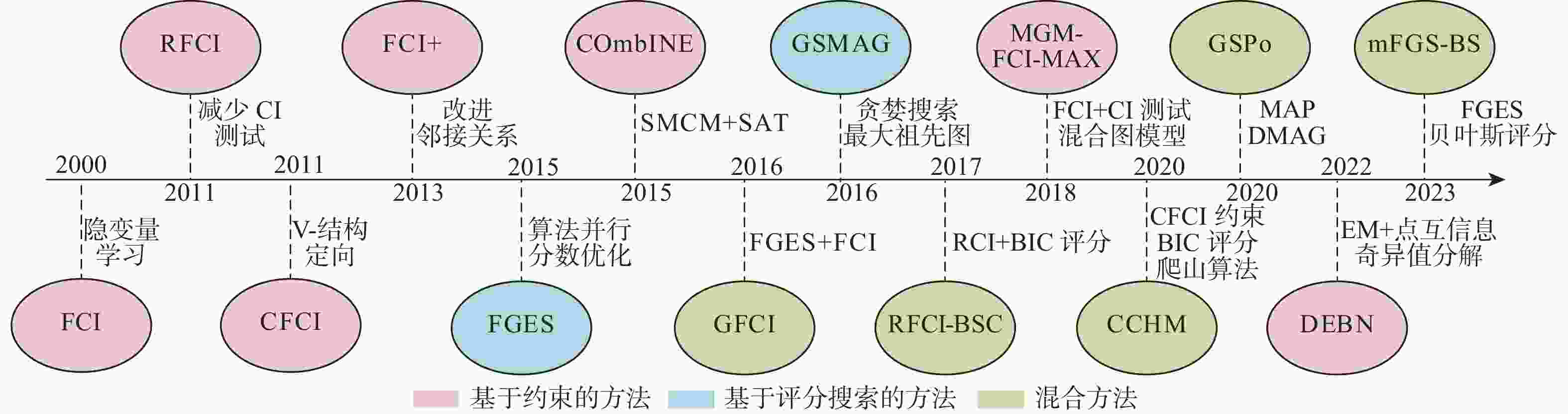

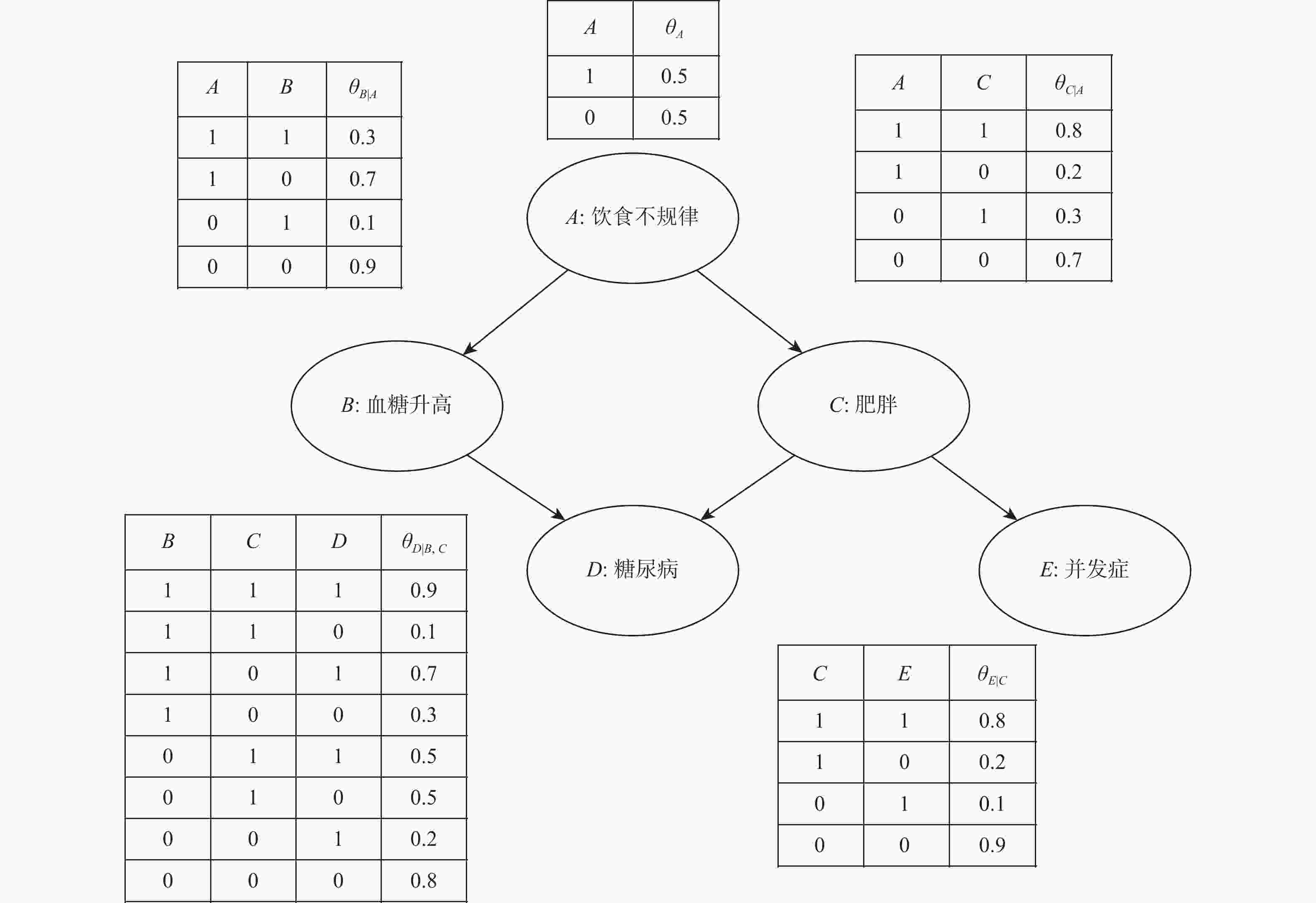

贝叶斯网络作为概率论与图论结合的工具,具备高效处理不确定性推理和数据分析的能力,被广泛应用于各领域解决复杂工程问题。此外,还可以结合先验知识和训练样本学习模型,克服了单纯依靠专家知识建立模型的局限性。基于此,回顾了贝叶斯网络的发展历程,分别从基于约束的方法、基于评分搜索的方法、混合约束和评分搜索的方法3个方面对已提出的贝叶斯网络结构学习方法进行分类归纳,并对各类方法研究的现状进行了总结分析。由于现实应用中的数据往往具有非完备性,从缺失数据处理和隐变量学习2个维度阐释了非完备贝叶斯网络结构学习的研究现状。对贝叶斯网络在不同领域中的应用情况进行阐述,并进行总结,讨论了未来贝叶斯网络结构学习方法研究的发展趋势。

Abstract:Bayesian networks, as a tool combining probability theory and graph theory, have the ability to efficiently handle uncertain reasoning and data analysis, and are widely used in various fields to solve complex engineering problems. Furthermore, the model can be learned by combining prior knowledge and training samples, overcoming the limitations of establishing the model solely relying on expert knowledge. Based on this, the development history of Bayesian networks was reviewed. The proposed Bayesian network structure learning algorithms were classified and summarized from three aspects: constraint-based methods, rating-based methods, and hybrid search algorithms respectively, and the current research status of various algorithms was summarized and analyzed. Since the data in practical applications often have incompleteness, the research status of incomplete Bayesian network structure learning is explained from two dimensions: missing data processing and latent variable learning. The application of Bayesian networks in different fields is expounded and summarized, and the development trend of future research on Bayesian network structure learning algorithms is discussed.


Table 1. Characteristics and application scenarios of constraint-based algorithms

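| Year | Algorithm | Characteristics | Applicable scenarios |
| --- | --- | --- | --- |
| 1991 | SGS | Large error and low efficiency, but reasonably stable; the foundation for later constraint-based algorithms | Only complete, small datasets |
| 1997 | PC | Uses d-separation to perform CI tests and recursively deletes edges; relatively accurate and efficient, but less stable than SGS; the classic constraint-based algorithm | Complete datasets requiring high-order CI tests (large parent sets) |
| 2000 | FCI | Built on PC; runs CI tests on pairs of adjacent vertices in the partial ancestral graph and then searches the superset D-Sep(A,B) to remove the influence of latent variables; more general than PC and the classic constraint-based algorithm for data with latent variables | Incomplete, large datasets |
| 2002 | TPDA | Built on SGS; uses V-structures and an orientation phase to orient edges, reducing the number of CI tests required; more accurate than the traditional PC algorithm | Complete, large datasets |
| 2006 | RAI | CI testing is similar to PC; faster than PC on high-order datasets | Complete, large datasets |
| 2010 | HPC | Unlike most constraint-based global search algorithms, it searches locally; an elimination step is run each time the CI tests add a new variable; handles small-sample datasets better, but learns inefficiently on high-order datasets | Only complete, small-sample datasets |
| 2011 | RFCI | Built on FCI; learns using only the conditioning sets formed from the parents of nodes found in the adjacency phase and modifies the V-structure orientation rules, which reduces the number of high-order CI tests and speeds up FCI | Incomplete, large datasets |
| 2013 | LMM | Designed specifically to address low structure-learning accuracy on small-sample data | Only complete, small-sample datasets with limited data |
| 2013 | FCI+ | Built on FCI; uses the conditioning sets formed from the parents of adjacent nodes while still identifying ancestral sets effectively, so it learns sparse causal graphs more efficiently on more complex datasets | Incomplete, large datasets |
| 2014 | PC-Stable | Recomputes adjacencies before the CI tests, reducing the impact of erroneous CI decisions that limited sample sizes cause in the traditional PC algorithm; more accurate and stable than PC on high-dimensional graphs, but slower | Complete, small-sample datasets with limited data; accuracy and speed drop on large datasets |
| 2014 | Opt01SS | Runs only zero-order and first-order CI tests; used to learn structure from small samples when data are limited | Only complete, small-sample datasets |
| 2014 | OptHPC | Built on the local-search HPC algorithm; an effective global super-structure recovery method; shows good specificity and accuracy at large sample sizes compared with Opt01SS and HPC | Complete, large datasets |
| 2016 | APC | Inherits the high data efficiency of PC and dynamically adjusts the order of CI tests, saving computation; faster than PC | Complete, large datasets |
| 2018 | MGM-FCI-MAX | Uses regression-based tests to detect conditional independence across variable types and the Sepset with the highest p-value to identify V-structures, improving accuracy; parallelization shortens the running time | Incomplete, large datasets |
| 2021 | CTPC | Combines a set of statistics suited to CI testing with a PC-based heuristic; learns continuous-time network structures with good accuracy and learning efficiency | Complete, continuous-time datasets; accuracy drops on large datasets |
| 2022 | PC-CS | Uses resampling and an improved CI test to address the selection bias introduced by complex survey data; more accurate and faster than PC-Stable | Complete, large datasets |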
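The edge-deletion idea shared by the PC family in Table 1 can be illustrated with a minimal sketch of the skeleton phase. It is not the implementation from any of the cited papers: `pc_skeleton`, `indep`, and `chain_oracle` are hypothetical names, and the CI test is replaced by an oracle function that simply answers whether two variables are independent given a conditioning set. In practice that oracle would be a statistical test on data.

```python
from itertools import combinations


def pc_skeleton(nodes, indep):
    """Skeleton phase of a PC-style search (illustrative sketch).

    `indep(x, y, cond)` is a hypothetical CI oracle/test that returns True
    when x and y are judged independent given the set `cond`.
    """
    adj = {v: set(nodes) - {v} for v in nodes}  # start from the complete graph
    sepset = {}
    order = 0
    # Grow the conditioning-set size until no node has enough neighbours left.
    while any(len(adj[x]) - 1 >= order for x in nodes):
        for x in nodes:
            for y in sorted(adj[x]):
                # Condition on subsets of x's current neighbours, excluding y.
                for cond in combinations(sorted(adj[x] - {y}), order):
                    if indep(x, y, set(cond)):
                        adj[x].discard(y)
                        adj[y].discard(x)
                        sepset[frozenset((x, y))] = set(cond)
                        break
        order += 1
    edges = {frozenset((x, y)) for x in nodes for y in adj[x]}
    return edges, sepset


def chain_oracle(x, y, cond):
    """Toy oracle for the chain A -> B -> C: only A and C separate, given B."""
    return {x, y} == {"A", "C"} and "B" in cond


edges, sepset = pc_skeleton(["A", "B", "C"], chain_oracle)
print(sorted(sorted(e) for e in edges))
```

Because adjacencies are updated while a level is still being processed, the result of this sketch can depend on the variable order, which is the sensitivity that Table 1 attributes to the traditional PC algorithm and that PC-Stable is designed to reduce.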

Table 2. Basic expressions and characteristics of the scoring functions used in score-based methods

| Category | Scoring function | Expression | Characteristics |
| --- | --- | --- | --- |
| Bayesian scoring functions | Bayesian score | $ \log P(G,K) = \log P(K \mid G) + \log P(G) $ | The optimal network structure maximizes the posterior probability $ P(G \mid K) $ |
| Bayesian scoring functions | BD | $ \mathrm{BD}(G \mid K) = \prod\limits_{i=1}^{n} \prod\limits_{j=1}^{q_i} \dfrac{\Gamma(\alpha_{ij*})}{\Gamma(\alpha_{ij*} + m_{ij*})} \prod\limits_{k=1}^{r_i} \dfrac{\Gamma(\alpha_{ijk} + m_{ijk})}{\Gamma(\alpha_{ijk})} $ | The parameter prior follows a Dirichlet distribution, but accuracy is relatively low |
| Bayesian scoring functions | BDeu | $ \mathrm{BDeu}(G \mid K) = P(G) \prod\limits_{i=1}^{n} \prod\limits_{j=1}^{q_i} \dfrac{\Gamma\left(\dfrac{\alpha}{q_i}\right)}{\Gamma\left(\dfrac{\alpha}{q_i} + m_{ij}\right)} \prod\limits_{k=1}^{r_i} \dfrac{\Gamma\left(\dfrac{\alpha}{r_i q_i} + m_{ijk}\right)}{\Gamma\left(\dfrac{\alpha}{r_i q_i}\right)} $ | The score assumes that the data are complete and follow a uniform distribution |
| Bayesian scoring functions | K2 | $ \mathrm{K2}(G \mid K) = \prod\limits_{i=1}^{n} \prod\limits_{j=1}^{q_i} \dfrac{(r_i - 1)!}{(N_{ij} + r_i - 1)!} \prod\limits_{k=1}^{r_i} N_{ijk}! $ | Uses a node ordering and the CH score to assess structure quality and greedy search to find the highest-scoring structure; a classic scoring function |
| Bayesian scoring functions | CH | $ \mathrm{CH}(G \mid K) = \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{q_i} \left[ \log\left(\dfrac{\Gamma(\alpha_{ij*})}{\Gamma(\alpha_{ij*} + m_{ij*})}\right) + \sum\limits_{k=1}^{r_i} \log\left(\dfrac{\Gamma(\alpha_{ijk} + m_{ijk})}{\Gamma(\alpha_{ijk})}\right) \right] $ | The structure prior is uniform and the hyperparameters $ \alpha_{ijk} $ must be chosen in advance; when $ \alpha_{ijk} = 1 $, the CH score reduces to the K2 score |
| Information-theoretic scoring functions | BIC | $ \mathrm{BIC}(G \mid K) = \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{q_i} \sum\limits_{k=1}^{r_i} m_{ijk} \log \theta_{ijk} - \dfrac{1}{2} \sum\limits_{i=1}^{n} q_i (r_i - 1) \log m $ | Measures how well the structure fits the data and includes a penalty on model complexity; used with large samples; a widely used scoring function |
| Information-theoretic scoring functions | MDL | $ \mathrm{MDL}(G \mid K) = \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{q_i} \sum\limits_{k=1}^{r_i} m_{ijk} \log\left(\dfrac{m_{ijk}}{m_{ij}}\right) - \dfrac{1}{2} C(G) \log m $ | Compresses the data, thereby reducing its encoding length |
| Information-theoretic scoring functions | AIC | $ \mathrm{AIC}(G \mid K) = \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{q_i} \sum\limits_{k=1}^{r_i} m_{ijk} \log\left(\dfrac{m_{ijk}}{m_{ij}}\right) - \sum\limits_{i=1}^{n} (r_i - 1) q_i $ | Obtained by simplifying MDL when the instance data follow a multinomial distribution; can improve model fit |
| Information-theoretic scoring functions | MIT | $ \mathrm{MIT}(G \mid K) = \sum\limits_{i=1,\ \mathrm{Pa}_G(X_i) \ne \varnothing}^{n} \left( 2N \cdot \mathrm{MI}_K\left(X_i, \mathrm{Pa}_G(X_i)\right) - \max\limits_{\sigma_i} \sum\limits_{j=1}^{q_i} \chi_{\alpha, l_{i\sigma_i(j)}} \right) $ | A scoring function based on information theory and CI tests; evaluates a structure by measuring the KL distance between the network structure and the observed data |
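To make the expressions in Table 2 concrete, the following minimal sketch evaluates the BIC and K2 contributions of a single node from its count table. Several things here are assumptions for illustration: the function names `bic_family` and `log_k2_family` are hypothetical, `counts[j][k]` stands for $m_{ijk}$ (written $N_{ijk}$ in the K2 row), $\theta_{ijk}$ is taken to be the maximum-likelihood estimate $m_{ijk}/m_{ij}$, and the K2 term is computed in log space with `math.lgamma` to avoid overflowing factorials.

```python
import math


def bic_family(counts, m):
    """BIC contribution of one node.

    counts[j][k] = m_ijk: samples with parent configuration j and node state k.
    m is the total sample size. theta_ijk is assumed to be m_ijk / m_ij.
    """
    q, r = len(counts), len(counts[0])
    loglik = 0.0
    for row in counts:
        m_ij = sum(row)
        for m_ijk in row:
            if m_ijk > 0:  # treat 0 * log(0) as 0
                loglik += m_ijk * math.log(m_ijk / m_ij)
    return loglik - 0.5 * q * (r - 1) * math.log(m)


def log_k2_family(counts):
    """Logarithm of the K2 factor of one node, using n! = Gamma(n + 1)."""
    r = len(counts[0])
    total = 0.0
    for row in counts:
        n_ij = sum(row)
        # log[(r - 1)! / (N_ij + r - 1)!]
        total += math.lgamma(r) - math.lgamma(n_ij + r)
        # log of the product of N_ijk! over the node states
        total += sum(math.lgamma(n + 1) for n in row)
    return total


# A binary node without parents (q = 1), observed 3 times in state 0
# and once in state 1.
toy_counts = [[3, 1]]
print(bic_family(toy_counts, m=4), log_k2_family(toy_counts))
```

Because both scores decompose over nodes, the score of a whole structure is the sum of these per-node terms, which is what lets search procedures re-score only the families changed by a local move.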

Table 3. Comparative experiment results of five algorithms under different noise data proportions[48]

| Noise ratio/% | Algorithm | HKS (score) | LKS (score) | AKS (score) | SSD (structure) | BSD (structure) | ASD (structure) | SET (time/s) | LET (time/s) | AET (time/s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 10 | ACO-B | −14804.11 | −14810.40 | −14805.79±2.28 | 2 | 13 | 5.55±2.58 | 358 | 567 | 402.5±86.5 |
| 10 | ABC-B | −14804.11 | −14810.40 | −14804.67±1.46 | 2 | 9 | 4.25±1.26 | 117 | 169 | 141.9±17.0 |
| 10 | BFO-B | −14804.11 | −14813.26 | −14805.75±2.44 | 2 | 6 | 3.65±1.06 | 343 | 477 | 401.0±36.0 |
| 10 | TS-B | −14805.81 | −14805.81 | −14805.81±0 | 2 | 2 | 2.00±0 | 238 | 245 | 240.1±2.5 |
| 10 | HC-B | −14953.06 | −14953.06 | −14953.06±0 | 11 | 11 | 11.00±0 | 7 | 8 | 7.1±0.2 |
| 20 | ACO-B | −15247.18 | −15257.07 | −15248.67±2.18 | 5 | 14 | 8.80±2.44 | 332 | 495 | 389.9±47.8 |
| 20 | ABC-B | −15247.18 | −15256.65 | −15247.70±2.06 | 8 | 13 | 8.50±1.36 | 114 | 182 | 154.9±18.0 |
| 20 | BFO-B | −15247.18 | −15256.92 | −15252.30±3.50 | 6 | 17 | 10.35±2.74 | 324 | 439 | 376.5±34.9 |
| 20 | TS-B | −15293.55 | −15293.55 | −15293.55±0 | 20 | 20 | 20.00±0 | 251 | 262 | 254.7±3.1 |
| 20 | HC-B | −15324.16 | −15324.16 | −15324.16±0 | 14 | 14 | 14.00±0 | 7 | 8 | 7.1±0.3 |
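Hill climbing appears as the HC-B baseline in Table 3 and as the search strategy of several methods in Table 4. The sketch below shows the general idea of greedy search over DAGs using edge addition, deletion, and reversal. It is an illustration under stated assumptions rather than any cited algorithm: `is_acyclic`, `neighbours`, `hill_climb`, and `toy_score` are hypothetical names, and the toy score just counts disagreements with a known target graph, whereas a real run would plug in a data-driven score such as BIC or K2.

```python
from itertools import permutations


def is_acyclic(nodes, edges):
    """Check acyclicity by repeatedly stripping nodes with no incoming edge."""
    remaining, es = set(nodes), set(edges)
    while remaining:
        roots = [v for v in remaining if not any(b == v for _, b in es)]
        if not roots:
            return False  # every remaining node has a parent, so a cycle exists
        remaining -= set(roots)
        es = {(a, b) for a, b in es if a in remaining}
    return True


def neighbours(nodes, edges):
    """Yield every DAG one edge addition, deletion, or reversal away."""
    for a, b in permutations(nodes, 2):
        if (a, b) in edges:
            yield edges - {(a, b)}                # deletion keeps acyclicity
            cand = (edges - {(a, b)}) | {(b, a)}  # reversal
        elif (b, a) not in edges:
            cand = edges | {(a, b)}               # addition
        else:
            continue
        if is_acyclic(nodes, cand):
            yield cand


def hill_climb(nodes, score, start=frozenset()):
    """Greedy ascent: take the best neighbour until none improves the score."""
    current = frozenset(start)
    best = score(current)
    while True:
        cand = max(neighbours(nodes, current), key=score, default=None)
        if cand is None or score(cand) <= best:
            return current, best
        current, best = frozenset(cand), score(cand)


# Hypothetical toy score: negative number of edges that differ from a target.
target = {("A", "B"), ("B", "C")}


def toy_score(graph):
    return -len(graph ^ target)


result = hill_climb(["A", "B", "C"], toy_score)
print(result)
```

The search stops at the first structure with no better neighbour, so with a real score it can end in a local optimum. That behaviour is consistent with the zero variance and short running time reported for HC-B in Table 3.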

Table 4. Search spaces, scoring functions, and search strategies of score-and-search-based methods[7,47,52-73]

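| Year | Algorithm | Search space | Scoring function | Search strategy |
| --- | --- | --- | --- | --- |
| 1968 | Chow-Liu[54] | Tree | Entropy | |
| 1992 | K2[7] | DAG | K2 | Hill climbing |
| 1995 | TS[55] | DAG | K2 & BIC | Tabu search |
| 1995 | HC[56] | DAG | BDe | Hill climbing |
| 1996 | GA[57] | Orderings | K2 | Genetic algorithm |
| 2001 | K2SN[58] | Orderings | K2 | Hill climbing |
| 2002 | GES[59] | Equivalence | BDeu | Greedy search |
| 2002 | ACO-B[60] | DAG | K2 | ACO + local search |
| 2005 | SGA[61] | Orderings | K2 | Genetic algorithm |
| 2025 | Hybrid MCMC[62] | DAG | BDeu | MCMC |
| 2008 | ACO-K2SN[63] | Orderings | K2 | ACO + local search |
| 2009 | BN-BQPSO[64] | DAG | BIC | Particle swarm optimization |
| 2009 | I-ACO-B[65] | DAG | K2 | ACO + local search |
| 2010 | IBPSO[66] | DAG | MDL | Particle swarm optimization |
| 2012 | GIES[67] | Equivalence | BIC | Greedy search |
| 2013 | PSO-K2[68] | Orderings | K2 | Particle swarm optimization |
| 2017 | FGES[69] | Equivalence | BIC | Greedy search |
| 2016 | BFO-B[47] | DAG | K2 | Bacterial foraging optimization |
| 2017 | Partition MCMC[70] | DAG | BIC | MCMC |
| 2020 | CIGAR[71] | Orderings | BDe | Genetic algorithm |
| 2020 | K2-I[72] | DAG | BIC | Hill climbing |
| 2023 | FGES-M[73] | Equivalence | BIC | Greedy search |
| 2022 | MAHC[53] | DAG | BIC | Hill climbing |
| 2022 | DFA-B[52] | DAG | K2 | Firefly optimization algorithm |

| Year | Disease | Bayesian application example | Reference |
| --- | --- | --- | --- |
| 2017 | Central nervous system injury | Intelligent classifier algorithms used to predict the type of pain in patients with spinal cord injury | [124] |
| 2018 | Chronic diseases (diabetes, arthritis, etc.) | A stepwise hidden variable approach with DBNs used to predict complications of chronic diseases | [125] |
| 2015 | Cancer | Deep belief networks used for the prediction and diagnosis of breast cancer | [126] |
| 2017 | Cancer | Bayesian networks and support vector machines used to predict survival probability after breast cancer surgery | [127] |
| 2016 | Cancer | A Bayesian network model graph of breast lesions used to predict the probability that a benign tumor becomes cancerous | [128] |
| 2015 | Heart disease | A Bayesian network model built from electronic health record data to predict cardiovascular risk | [129] |
| 2017 | Heart disease | Bayesian networks used on high-dimensional datasets to model complications caused by hypertension | [130] |
| 2018 | Infectious disease | A Bayesian network used to build a diagnostic model for dengue infection | [131] |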

Table 6. Industrial applications of Bayesian networks in fault diagnosis and risk assessment[135-139]

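| Year | Fault type | Summary | Reference |
| --- | --- | --- | --- |
| 2015 | Intelligent fault diagnosis | Proposes a two-level fault diagnosis technique for process operations and uses Bayesian networks to analyze the limitation that the root cause of a fault cannot be located accurately when unmonitored process variables exist | [135] |
| 2016 | Intelligent fault diagnosis | Combines historical and online information to develop a Bayesian fault diagnosis system that addresses the asynchronous measurement problem and can be used for event tree analysis | [136] |
| 2018 | Industrial fault diagnosis | Builds a model of emergency disconnect failures and their consequences through fault tree and event sequence diagram (FT-ESD) analysis, maps the FT-ESD model to a Bayesian network, and proposes a Bayesian network method for analyzing the failure probability of emergency disconnect operations | [137] |
| 2018 | Industrial fault diagnosis | Proposes DR-BN, a fault diagnosis method for chiller units, and uses it to evaluate feature selection results | [138] |
| 2016 | Dynamic risk assessment | Proposes a new Bayesian-network-based method for dynamic risk assessment of the unloading process of liquefied natural gas carriers; it identifies hazardous events during unloading and models failure analysis for critical events | [139] |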

[1] LEE S J, SIAU K. A review of data mining techniques[J]. Industrial Management & Data Systems, 2001, 101(1): 41-46.
[2] HECKERMAN D, MAMDANI A, WELLMAN M P. Real-world applications of Bayesian networks[J]. Communications of the ACM, 1995, 38(3): 24-26. doi: 10.1145/203330.203334
[3] SPIRTES P, GLYMOUR C, SCHEINES R. Reply to Humphreys and Freedman’s review of causation, prediction, and search[J]. The British Journal for the Philosophy of Science, 1997, 48(4): 555-568. doi: 10.1093/bjps/48.4.555
[4] COLOMBO D, MAATHUIS M H. Order-independent constraint-based causal structure learning[EB/OL]. (2013-09-27)[2023-07-01]. http://arxiv.org/abs/1211.3295v2.
[5] LIU J X, TIAN Z L. Verification of three-phase dependency analysis Bayesian network learning method for maize carotenoid gene mining[J]. BioMed Research International, 2017, 2017: 1813494.
[6] MASEGOSA A R, MORAL S. An interactive approach for Bayesian network learning using domain/expert knowledge[J]. International Journal of Approximate Reasoning, 2013, 54(8): 1168-1181. doi: 10.1016/j.ijar.2013.03.009
[7] COOPER G F, HERSKOVITS E. A Bayesian method for the induction of probabilistic networks from data[J]. Machine Learning, 1992, 9(4): 309-347.
[8] NEATH A A, CAVANAUGH J E. The Bayesian information criterion: background, derivation, and applications[J]. Wiley Interdisciplinary Reviews: Computational Statistics, 2012, 4(2): 199-203. doi: 10.1002/wics.199
[9] LIU H, CAO Y H. Study of heuristic search and exhaustive search in search algorithms of the structural learning[C]//Proceedings of the 2nd International Conference on Multimedia and Information Technology. Piscataway: IEEE Press, 2010: 169-171.
[10] CHAKRABORTY A, KAR A K. Swarm intelligence: a review of algorithms[M]//PATNAIK S, YANG X S, NAKAMATSU K. Nature-inspired computing and optimization. Berlin: Springer, 2017: 475-494.
[11] GEFFNER H, DECHTER R, HALPERN J Y. Probabilistic and causal inference: the works of Judea Pearl[M]. New York: ACM, 2022.
[12] PEARL J. Causality: models, reasoning and inference[M]. Cambridge: Cambridge University Press, 2000.
[13] DAGUM P, GALPER A. Additive belief-network models[C]//Proceedings of the Uncertainty in Artificial Intelligence. Amsterdam: Elsevier, 1993: 91-98.
[14] BATCHELOR C, CAIN J. Application of belief networks to water management studies[J]. Agricultural Water Management, 1999, 40(1): 51-57. doi: 10.1016/S0378-3774(98)00103-6
[15] TICEHURST J L, NEWHAM L T H, RISSIK D, et al. A Bayesian network approach for assessing the sustainability of coastal lakes in New South Wales, Australia[J]. Environmental Modelling & Software, 2007, 22(8): 1129-1139.
[16] SPIRTES P, GLYMOUR C, SCHEINES R. From probability to causality[J]. Philosophical Studies: An International Journal for Philosophy in the Analytic Tradition, 1991, 64(1): 1-36. doi: 10.1007/BF00356088
[17] GEIGER D, VERMA T, PEARL J. D-separation: from theorems to algorithms[M]//HENRION M, SHACHTER R D, KANAL L N, et al. Uncertainty in artificial intelligence. Amsterdam: Elsevier, 1990: 139-148.
[18] CHENG J, GREINER R, KELLY J, et al. Learning Bayesian networks from data: an information-theory based approach[J]. Artificial Intelligence, 2002, 137(1-2): 43-90. doi: 10.1016/S0004-3702(02)00191-1
[19] YEHEZKEL R, LERNER B. Bayesian network structure learning by recursive autonomy identification[C]//Proceedings of the Structural, Syntactic, and Statistical Pattern Recognition. Berlin: Springer, 2006: 154-162.
[20] DE MORAIS S R, AUSSEM A. An efficient and scalable algorithm for local Bayesian network structure discovery[C]//Proceedings of the Machine Learning and Knowledge Discovery in Databases. Berlin: Springer, 2010: 164-179.
[21] MAHDI R, MEZEY J. Sub-local constraint-based learning of Bayesian networks using a joint dependence criterion[J]. Journal of Machine Learning Research, 2013, 14(1): 1563-1603.
[22] VILLANUEVA E, MACIEL C D. Efficient methods for learning Bayesian network super-structures[J].
Neurocomputing, 2014, 123: 3-12. doi: 10.1016/j.neucom.2012.10.035
[23] MINN S, FU S K. Accelerating structure learning of Bayesian network[J]. Computer Science, 2016, 43(2): 263-268 (in Chinese). doi: 10.11896/j.issn.1002-137X.2016.02.055
[24] JIANG Y L, LIANG Z Z, GAO H, et al. An improved constraint-based Bayesian network learning method using Gaussian kernel probability density estimator[J]. Expert Systems with Applications, 2018, 113: 544-554. doi: 10.1016/j.eswa.2018.06.058
[25] BREGOLI A, SCUTARI M, STELLA F. A constraint-based algorithm for the structural learning of continuous-time Bayesian networks[J]. International Journal of Approximate Reasoning, 2021, 138: 105-122. doi: 10.1016/j.ijar.2021.08.005
[26] MARELLA D, VICARD P. Bayesian network structural learning from complex survey data: a resampling based approach[J]. Statistical Methods & Applications, 2022, 31(4): 981-1013.
[27] ZGUROVSKII M Z, BIDYUK P I, TERENT’EV A N. Methods of constructing Bayesian networks based on scoring functions[J]. Cybernetics and Systems Analysis, 2008, 44(2): 219-224. doi: 10.1007/s10559-008-0021-x
[28] SIMONS K T, KOOPERBERG C, HUANG E, et al. Assembly of protein tertiary structures from fragments with similar local sequences using simulated annealing and Bayesian scoring functions[J]. Journal of Molecular Biology, 1997, 268(1): 209-225. doi: 10.1006/jmbi.1997.0959
[29] YANG S L, CHANG K C. Comparison of score metrics for Bayesian network learning[J]. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, 2002, 32(3): 419-428. doi: 10.1109/TSMCA.2002.803772
[30] SCUTARI M. An empirical-Bayes score for discrete Bayesian networks[C]//Proceedings of the Conference on Probabilistic Graphical Models. [S.l.]: PMLR, 2016: 438-448.
[31] DE CAMPOS L M, FRIEDMAN N.
A scoring function for learning Bayesian networks based on mutual information and conditional independence tests[J]. Journal of Machine Learning Research, 2006, 7(10): 2149-2187.
[32] DE CAMPOS L M, FERNÁNDEZ-LUNA J M, GÁMEZ J A, et al. Ant colony optimization for learning Bayesian networks[J]. International Journal of Approximate Reasoning, 2002, 31(3): 291-311. doi: 10.1016/S0888-613X(02)00091-9
[33] VAN LAARHOVEN P J M, AARTS E H L. Simulated annealing[M]//VAN LAARHOVEN P J M, AARTS E H L. Simulated annealing: theory and applications. Berlin: Springer, 1987: 7-15.
[34] BOUCKAERT R R. Properties of Bayesian belief network learning algorithms[C]//Proceedings of the 10th Annual Conference on Uncertainty in Artificial Intelligence. Amsterdam: Elsevier, 1994: 102-109.
[35] LI X Q, YU H Z. Double K2 algorithm for Bayesian network structure learning[J]. Science Technology and Engineering, 2022, 22(24): 10602-10610 (in Chinese). doi: 10.3969/j.issn.1671-1815.2022.24.031
[36] SELMAN B, GOMES C P. Hill-climbing search[J]. Encyclopedia of Cognitive Science, 2006, 81: 82.
[37] GLOVER F, LAGUNA M. Tabu search[M]//DU D Z, PARDALOS P M. Handbook of combinatorial optimization. Berlin: Springer, 1998: 2093-229.
[38] LIU D Y, WANG F, LU Y N, et al. Research on learning Bayesian network structure based on genetic algorithms[J]. Journal of Computer Research and Development, 2001, 38(8): 916-922 (in Chinese).
[39] CUI G, WONG M L, LUI H K. Machine learning for direct marketing response models: Bayesian networks with evolutionary programming[J]. Management Science, 2006, 52(4): 597-612. doi: 10.1287/mnsc.1060.0514
[40] GÁMEZ J A, PUERTA J M. Searching for the best elimination sequence in Bayesian networks by using ant colony optimization[J]. Pattern Recognition Letters, 2002, 23(1-3): 261-277.
doi: 10.1016/S0167-8655(01)00123-4
[41] GHEISARI S, MEYBODI M R. BNC-PSO: structure learning of Bayesian networks by particle swarm optimization[J]. Information Sciences, 2016, 348: 272-289. doi: 10.1016/j.ins.2016.01.090
[42] SAHIN F, DEVASIA A. Distributed particle swarm optimization for structural Bayesian network learning[M]//CHAN F, TIWARI M. Swarm intelligence, focus on ant and particle swarm optimization. London: IntechOpen Limited, 2007: 505-532.
[43] ASKARI M B A, AHSAEE M G. Bayesian network structure learning based on cuckoo search algorithm[C]//Proceedings of the 6th Iranian Joint Congress on Fuzzy and Intelligent Systems. Piscataway: IEEE Press, 2018: 127-130.
[44] KAREEM S W, OKUR M C. Evaluation of Bayesian network structure learning using elephant swarm water search algorithm[M]//SHI C. Handbook of research on advancements of swarm intelligence algorithms for solving real-world problems. Hershey: Engineering Science Reference, 2020: 139-159.
[45] WANG J Y, LIU S Y. Novel binary encoding water cycle algorithm for solving Bayesian network structures learning problem[J]. Knowledge-Based Systems, 2018, 150: 95-110. doi: 10.1016/j.knosys.2018.03.007
[46] JI J Z, WEI H K, LIU C N. An artificial bee colony algorithm for learning Bayesian networks[J]. Soft Computing, 2013, 17(6): 983-994. doi: 10.1007/s00500-012-0966-6
[47] YANG C C, JI J Z, LIU J M, et al. Structural learning of Bayesian networks by bacterial foraging optimization[J]. International Journal of Approximate Reasoning, 2016, 69: 147-167. doi: 10.1016/j.ijar.2015.11.003
[48] JI J Z, YANG C C, LIU J M, et al. A comparative study on swarm intelligence for structure learning of Bayesian networks[J]. Soft Computing, 2017, 21(22): 6713-6738. doi: 10.1007/s00500-016-2223-x
[49] ZHANG L, ZHANG J. Structure learning of BN based on improved genetic algorithm[J].
Computer Systems & Applications, 2011, 20(9): 68-72 (in Chinese). doi: 10.3969/j.issn.1003-3254.2011.09.015
[50] JIN Y, HU Y A, ZHANG J, et al. Bayesian network structure learning based on K2 and simulated annealing[J]. Journal of Southeast University (Natural Science Edition), 2012, 42(Sup 1): 82-86 (in Chinese).
[51] PAN C S, ZHANG B, LYU Y N, et al. K-Means text clustering based on improved gray wolf optimization algorithm[J]. Computer Engineering and Applications, 2021, 57(1): 188-193 (in Chinese). doi: 10.3778/j.issn.1002-8331.2004-0016
[52] WANG X C, REN H J, GUO X X. A novel discrete firefly algorithm for Bayesian network structure learning[J]. Knowledge-Based Systems, 2022, 242: 108426. doi: 10.1016/j.knosys.2022.108426
[53] CONSTANTINOU A C, LIU Y, KITSON N K, et al. Effective and efficient structure learning with pruning and model averaging strategies[J]. International Journal of Approximate Reasoning, 2022, 151: 292-321. doi: 10.1016/j.ijar.2022.09.016
[54] CHOW C, LIU C. Approximating discrete probability distributions with dependence trees[J]. IEEE Transactions on Information Theory, 1968, 14(3): 462-467. doi: 10.1109/TIT.1968.1054142
[55] BOUCKAERT R R. Bayesian belief networks: from construction to inference[D]. Utrecht: Utrecht University, 1995.
[56] HECKERMAN D, GEIGER D, CHICKERING D M. Learning Bayesian networks: the combination of knowledge and statistical data[C]//Proceedings of the 10th Conference on Uncertainty in Artificial Intelligence. Amsterdam: Elsevier, 1994: 293-301.
[57] LARRANAGA P, KUIJPERS C M H, MURGA R H, et al. Learning Bayesian network structures by searching for the best ordering with genetic algorithms[J]. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, 1996, 26(4): 487-493.
doi: 10.1109/3468.508827
[58] DE CAMPOS L M, PUERTA J M. Stochastic local and distributed search algorithms for learning belief networks[C]//Proceedings of the Ⅲ International Symposium on Adaptive Systems: Evolutionary Computation and Probabilistic Graphical Model. Berlin: Springer, 2001: 109-115.
[59] CHICKERING D M. Optimal structure identification with greedy search[J]. Journal of Machine Learning Research, 2002, 3: 507-554.
[60] DE CAMPOS L M, GÁMEZ J A, PUERTA J M. Learning Bayesian networks by ant colony optimisation: searching in two different spaces[J]. Mathware & Soft Computing, 2002, 9: 2-3.
[61] SHETTY S, SONG M. Structure learning of Bayesian networks using a semantic genetic algorithm-based approach[C]//Proceedings of the 3rd International Conference on Information Technology: Research and Education. Piscataway: IEEE Press, 2005: 454-458.
[62] CHAN L S H, CHU A M Y, SO M K P. A hybrid Markov chain Monte Carlo approach for structural learning in Bayesian networks based on variable blocking[EB/OL]. [2023-07-01]. http://doi.org/10.1214/25-BA1521.
[63] SALEHPOUR A A, MIRMOBIN B, AFZALI-KUSHA A, et al. An energy efficient routing protocol for cluster-based wireless sensor networks using ant colony optimization[C]//Proceedings of the International Conference on Innovations in Information Technology. Piscataway: IEEE Press, 2008: 455-459.
[64] ZHAO J, SUN J, XU W B, et al. Structure learning of Bayesian networks based on discrete binary quantum-behaved particle swarm optimization algorithm[C]//Proceedings of the 5th International Conference on Natural Computation. Piscataway: IEEE Press, 2009: 86-90.
[65] JI J Z, ZHANG H X, HU R B, et al. A Bayesian network learning algorithm based on independence test and ant colony optimization[J]. Acta Automatica Sinica, 2009, 35(3): 281-288.
[66] LI X L. A particle swarm optimization and immune theory-based algorithm for structure learning of Bayesian networks[J].
International Journal of Database Theory and Application, 2010, 3(2): 61-69.
[67] HAUSER A, BÜHLMANN P. Characterization and greedy learning of interventional Markov equivalence classes of directed acyclic graphs[J]. Journal of Machine Learning Research, 2012, 13(1): 2409-2464.
[68] AOUAY S, JAMOUSSI S, AYED Y B. Particle swarm optimization based method for Bayesian network structure learning[C]//Proceedings of the 5th International Conference on Modeling, Simulation and Applied Optimization. Piscataway: IEEE Press, 2013: 1-6.
[69] RAMSEY J, GLYMOUR M, SANCHEZ-ROMERO R, et al. A million variables and more: the fast greedy equivalence search algorithm for learning high-dimensional graphical causal models, with an application to functional magnetic resonance images[J]. International Journal of Data Science and Analytics, 2017, 3(2): 121-129. doi: 10.1007/s41060-016-0032-z
[70] SCANAGATTA M, CORANI G, ZAFFALON M. Improved local search in Bayesian networks structure learning[C]//Proceedings of the Advanced Methodologies for Bayesian Networks. [S.l.]: PMLR, 2017: 45-56.
[71] JOSE S, LOUIS S J, DASCALU S M, et al. Bayesian network structure learning using case-injected genetic algorithms[C]//Proceedings of the IEEE 32nd International Conference on Tools with Artificial Intelligence. Piscataway: IEEE Press, 2020: 572-579.
[72] BEHJATI S, BEIGY H. Improved K2 algorithm for Bayesian network structure learning[J]. Engineering Applications of Artificial Intelligence, 2020, 91: 103617. doi: 10.1016/j.engappai.2020.103617
[73] BERNAOLA N, MICHIELS M, LARRAÑAGA P, et al. Learning massive interpretable gene regulatory networks of the human brain by merging Bayesian networks[J]. PLoS Computational Biology, 2023, 19(12): e1011443. doi: 10.1371/journal.pcbi.1011443
[74] SINGH M, VALTORTA M. Construction of Bayesian network structures from data: a brief survey and an efficient algorithm[J]. International Journal of Approximate Reasoning, 1995, 12(2): 111-131.
doi: 10.1016/0888-613X(94)00016-V
[75] DASH D, DRUZDZEL M J. A hybrid anytime algorithm for the construction of causal models from sparse data[EB/OL]. (2013-01-23)[2023-07-01]. http://arxiv.org/abs/1301.6689.
[76] DE CAMPOS L M, FERNÁNDEZ-LUNA J M, PUERTA J M. An iterated local search algorithm for learning Bayesian networks with restarts based on conditional independence tests[J]. International Journal of Intelligent Systems, 2003, 18(2): 221-235. doi: 10.1002/int.10085
[77] TSAMARDINOS I, BROWN L E, ALIFERIS C F. The max-min hill-climbing Bayesian network structure learning algorithm[J]. Machine Learning, 2006, 65(1): 31-78. doi: 10.1007/s10994-006-6889-7
[78] LIU H, ZHOU S G, LAM W, et al. A new hybrid method for learning Bayesian networks: separation and reunion[J]. Knowledge-Based Systems, 2017, 121: 185-197. doi: 10.1016/j.knosys.2017.01.029
[79] CASTELO R, ROVERATO A. A robust procedure for Gaussian graphical model search from microarray data with p larger than n[J]. Journal of Machine Learning Research, 2006, 7(12): 2621-2650.
[80] NANDY P, HAUSER A, MAATHUIS M H. High-dimensional consistency in score-based and hybrid structure learning[J]. The Annals of Statistics, 2018, 46(6A): 3151-3183.
[81] ZHAO J J, HO S S. Improving Bayesian network local structure learning via data-driven symmetry correction methods[J]. International Journal of Approximate Reasoning, 2019, 107: 101-121. doi: 10.1016/j.ijar.2019.02.004
[82] DAI J G, REN J, DU W C. Decomposition-based Bayesian network structure learning algorithm using local topology information[J]. Knowledge-Based Systems, 2020, 195: 105602. doi: 10.1016/j.knosys.2020.105602
[83] SUN B D, ZHOU Y, WANG J J, et al. A new PC-PSO algorithm for Bayesian network structure learning with structure priors[J]. Expert Systems with Applications, 2021, 184: 115237. doi: 10.1016/j.eswa.2021.115237
[84] TONG Z J, LI J X, QIAO Z R.
A structural learning algorithm for Bayesian networks with structural priori[J]. Electronic Science and Technology, 2023, 36(11): 1-7 (in Chinese).
[85] RESSEGUIER N, GIORGI R, PAOLETTI X. Sensitivity analysis when data are missing not-at-random[J]. Epidemiology, 2011, 22(2): 282. doi: 10.1097/EDE.0b013e318209dec7
[86] DEMPSTER A P, LAIRD N M, RUBIN D B. Maximum likelihood from incomplete data via the EM algorithm[J]. Journal of the Royal Statistical Society: Series B (Methodological), 1977, 39(1): 1-22. doi: 10.1111/j.2517-6161.1977.tb01600.x
[87] FRIEDMAN N. The Bayesian structural EM algorithm[EB/OL]. (2013-01-30)[2023-07-01]. http://arxiv.org/abs/1301.7373.
[88] FRIEDMAN N. Learning belief networks in the presence of missing values and hidden variables[C]//Proceedings of the Fourteenth International Conference on Machine Learning. New York: ACM, 1997: 125-133.
[89] WONG M L, GUO Y Y. Learning Bayesian networks from incomplete databases using a novel evolutionary algorithm[J]. Decision Support Systems, 2008, 45(2): 368-383. doi: 10.1016/j.dss.2008.01.002
[90] SCANAGATTA M, CORANI G, ZAFFALON M, et al. Efficient learning of bounded-treewidth Bayesian networks from complete and incomplete data sets[J]. International Journal of Approximate Reasoning, 2018, 95: 152-166. doi: 10.1016/j.ijar.2018.02.004
[91] QIAN G Q, WU Y H, XU M. Multiple change-points detection by empirical Bayesian information criteria and Gibbs sampling induced stochastic search[J]. Applied Mathematical Modelling, 2019, 72: 202-216. doi: 10.1016/j.apm.2019.03.012
[92] GELFAND A E. Gibbs sampling[J]. Journal of the American Statistical Association, 2000, 95(452): 1300. doi: 10.1080/01621459.2000.10474335
[93] BROOKS S. Markov chain Monte Carlo method and its application[J]. Journal of the Royal Statistical Society: Series D (the Statistician), 1998, 47(1): 69-100.
[94] BODEWES T, SCUTARI M. Learning Bayesian networks from incomplete data with the node-average likelihood[J].
International Journal of Approximate Reasoning, 2021, 138: 145-160. doi: 10.1016/j.ijar.2021.07.015
[95] LIU Y, CONSTANTINOU A C. Greedy structure learning from data that contain systematic missing values[J]. Machine Learning, 2022, 111(10): 3867-3896. doi: 10.1007/s10994-022-06195-8
[96] ELIDAN G, LOTNER N, FRIEDMAN N, et al. Discovering hidden variables: a structure-based approach[C]//Proceedings of the Advances in Neural Information Processing Systems. [S.l.]: NIPS, 2000.
[97] ELIDAN G, FRIEDMAN N. Learning hidden variable networks: the information bottleneck approach[J]. Journal of Machine Learning Research, 2005, 6: 81-127.
[98] SPIRTES P, GLYMOUR C N, SCHEINES R, et al. Causation, prediction, and search[M]. Cambridge: MIT Press, 2000.
[99] COLOMBO D, MAATHUIS M H, KALISCH M, et al. Learning high-dimensional DAGs with latent and selection variables[C]//Proceedings of the 27th Conference on Uncertainty in Artificial Intelligence. [S.l.]: DBLP, 2011: 850-850.
[100] CLAASSEN T, MOOIJ J, HESKES T. Learning sparse causal models is not NP-hard[EB/OL]. (2013-09-26)[2023-07-01]. http://arxiv.org/abs/1309.6824.
[101] SCHEINES R. An introduction to causal inference[EB/OL]. [2023-07-01]. http://www.academin.edu/3135379/An_introduction_to_causal_inference.
[102] TRIANTAFILLOU S, TSAMARDINOS I. Constraint-based causal discovery from multiple interventions over overlapping variable sets[J]. The Journal of Machine Learning Research, 2015, 16(1): 2147-2205.
[103] RAGHU V K, RAMSEY J D, MORRIS A, et al. Comparison of strategies for scalable causal discovery of latent variable models from mixed data[J]. International Journal of Data Science and Analytics, 2018, 6(1): 33-45. doi: 10.1007/s41060-018-0104-3
[104] QI Z W, YUE K, DUAN L, et al. Dynamic embeddings for efficient parameter learning of Bayesian network with multiple latent variables[J]. Information Sciences, 2022, 590: 198-216. doi: 10.1016/j.ins.2022.01.020
[105] RAMSEY J D.
Scaling up greedy causal search for continuous variables[EB/OL]. (2015-10-11)[2023-07-01]. http://arxiv.org/abs/1507.07749v2.
[106] TRIANTAFILLOU S, TSAMARDINOS I. Score-based vs constraint-based causal learning in the presence of confounders[C]//Proceedings of the Uncertainty in Artificial Intelligence. New York: [s.n.], 2016: 59-67.
[107] DRTON M, EICHLER M, RICHARDSON T S. Computing maximum likelihood estimates in recursive linear models with correlated errors[J]. Journal of Machine Learning Research, 2009, 10: 2329-2348.
[108] OGARRIO J M, SPIRTES P, RAMSEY J. A hybrid causal search algorithm for latent variable models[C]//Proceedings of the Conference on probabilistic graphical models. [S.l.]: PMLR, 2016: 368-379.
[109] JABBARI F, RAMSEY J, SPIRTES P, et al. Discovery of causal models that contain latent variables through Bayesian scoring of independence constraints[C]//Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Berlin: Springer, 2017: 142-157.
[110] CHOBTHAM K, CONSTANTINOU A C. Bayesian network structure learning with causal effects in the presence of latent variables[C]//Proceedings of the International Conference on Probabilistic Graphical Models. [S.l.]: PMLR, 2020: 101-112.
[111] BERNSTEIN D, SAEED B, SQUIRES C, et al. Ordering-based causal structure learning in the presence of latent variables[C]//Proceedings of the International Conference on Artificial Intelligence and Statistics. [S.l.]: PMLR, 2020: 4098-4108.
[112] CHOBTHAM K, CONSTANTINOU A C, KITSON N K. Hybrid Bayesian network discovery with latent variables by scoring multiple interventions[J]. Data Mining and Knowledge Discovery, 2023, 37(1): 476-520. doi: 10.1007/s10618-022-00882-9
[113] WANG L, YANG H Y, ZHANG S W, et al. Intuitionistic fuzzy dynamic Bayesian network and its application to terminating situation assessment[J]. Procedia Computer Science, 2019, 154: 238-248. doi: 10.1016/j.procs.2019.06.036
[114] YAN S L, LI D H. Threat level assessment of the air combat target based on DBN[J]. Computer & Digital Engineering, 2015, 43(12): 2150-2154 (in Chinese). doi: 10.3969/j.issn.1672-9722.2015.12.012
[115] HUANG C Q, DONG K S, HUANG H Q, et al. Autonomous air combat maneuver decision using Bayesian inference and moving horizon optimization[J]. Journal of Systems Engineering and Electronics, 2018, 29(1): 86-97.
[116] BOURS M J. Bayes’ rule in diagnosis[J]. Journal of Clinical Epidemiology, 2021, 131: 158-160. doi: 10.1016/j.jclinepi.2020.12.021
[117] LIU G F, MENG H Y, ZHANG Y B. Bayes analysis of mixed model applied in a multi-center trials[J]. Chinese Journal of Health Statistics, 2005, 22(4): 200-203 (in Chinese). doi: 10.3969/j.issn.1002-3674.2005.04.003
[118] ZHOU X Y, FANG J Q. Gibbs sampling in random effects model for meta-analysis with application[J]. Chinese Journal of Health Statistics, 2002, 19(4): 204-207 (in Chinese). doi: 10.3969/j.issn.1002-3674.2002.04.004
[119] WIENS J, WALLACE B C. Special issue on machine learning for health and medicine[J]. Machine Learning, 2016, 102(3): 305-307. doi: 10.1007/s10994-015-5533-9
[120] LIBBRECHT M W, NOBLE W S. Machine learning applications in genetics and genomics[J]. Nature Reviews Genetics, 2015, 16(6): 321-332. doi: 10.1038/nrg3920
[121] ALIZADEHSANI R, ABDAR M, JALALI S M J, et al. Comparing the performance of feature selection algorithms for wart treatment selection[C]//Proceedings of the International Workshop on Future Technology. [S.l.: s.n.], 2019: 6-18.
[122] PARMAR C, GROSSMANN P, RIETVELD D, et al. Radiomic machine-learning classifiers for prognostic biomarkers of head and neck cancer[J].
Frontiers in Oncology, 2015, 5: 272. [123] CHEN P, WU K Y, GHATTAS O. Bayesian inference of heterogeneous epidemic models: application to COVID-19 spread accounting for long-term care facilities[J]. Computer Methods in Applied Mechanics and Engineering, 2021, 385: 114020. doi: 10.1016/j.cma.2021.114020 [124] NASRHEIDARABADI N, HAKEMI L, KOLIVAND P, et al. Comparing performances of intelligent classifier algorithms for predicting type of pain in patients with spinal cord injury[J]. Electronic Physician, 2017, 9(7): 4847-4852. doi: 10.19082/4847 [125] YOUSEFI L, TUCKER A, AL-LUHAYBI M, et al. Predicting disease complications using a stepwise hidden variable approach for learning dynamic Bayesian networks[C]//Proceedings of the IEEE 31st International Symposium on Computer-Based Medical Systems. Piscataway: IEEE Press, 2018: 106-111. [126] KHADEMI M, NEDIALKOV N S. Probabilistic graphical models and deep belief networks for prognosis of breast cancer[C]//Proceedings of the IEEE 14th International Conference on Machine Learning and Applications. Piscataway: IEEE Press, 2015: 727-732. [127] ALJAWAD D A, ALQAHTANI E, AL-KUHAILI G, et al. Breast cancer surgery survivability prediction using Bayesian network and support vector machines[C]//Proceedings of the International Conference on Informatics, Health & Technology. Piscataway: IEEE Press, 2017: 1-6. [128] REFAI A, MEROUANI H F, AOURAS H. Maintenance of a Bayesian network: application using medical diagnosis[J]. Evolving Systems, 2016, 7(3): 187-196. doi: 10.1007/s12530-016-9146-8 [129] BANDYOPADHYAY S, WOLFSON J, VOCK D M, et al. Data mining for censored time-to-event data: a Bayesian network model for predicting cardiovascular risk from electronic health record data[J]. Data Mining and Knowledge Discovery, 2015, 29(4): 1033-1069. doi: 10.1007/s10618-014-0386-6 [130] BUKHANOV N, BALAKHONTCEVA M, KOVALCHUK S, et al. Multiscale modeling of comorbidity relations in hypertensive outpatients[J]. 
Procedia Computer Science, 2017, 121: 446-450. doi: 10.1016/j.procs.2017.11.060 [131] SA-NGAMUANG C, HADDAWY P, LUVIRA V, et al. Accuracy of dengue clinical diagnosis with and without NS1 antigen rapid test: comparison between human and Bayesian network model decision[J]. PLoS Neglected Tropical Diseases, 2018, 12(6): e0006573. doi: 10.1371/journal.pntd.0006573 [132] SHI H Y, LUO G P, ZHENG H W, et al. Coupling the water-energy-food-ecology nexus into a Bayesian network for water resources analysis and management in the Syr Darya River Basin[J]. Journal of Hydrology, 2020, 581: 124387. doi: 10.1016/j.jhydrol.2019.124387 [133] HUI E, STAFFORD R, MATTHEWS I M, et al. Bayesian networks as a novel tool to enhance interpretability and predictive power of ecological models[J]. Ecological Informatics, 2022, 68: 101539. doi: 10.1016/j.ecoinf.2021.101539 [134] CHATRABGOUN O, HOSSEINIAN-FAR A, DANESHKHAH A. Constructing gene regulatory networks from microarray data using non-Gaussian pair-copula Bayesian networks[J]. Journal of Bioinformatics and Computational Biology, 2020, 18(4): 2050023. doi: 10.1142/S0219720020500237 [135] YU H Y, KHAN F, GARANIYA V. Modified independent component analysis and Bayesian network-based two-stage fault diagnosis of process operations[J]. Industrial & Engineering Chemistry Research, 2015, 54(10): 2724-2742. [136] JIANG Q C, HUANG B, DING S X, et al. Bayesian fault diagnosis with asynchronous measurements and its application in networked distributed monitoring[J]. IEEE Transactions on Industrial Electronics, 2016, 63(10): 6316-6324. doi: 10.1109/TIE.2016.2577545 [137] CHANG Y J, CHEN G M, WU X F, et al. Failure probability analysis for emergency disconnect of deepwater drilling riser using Bayesian network[J]. Journal of Loss Prevention in the Process Industries, 2018, 51: 42-53. doi: 10.1016/j.jlp.2017.11.005 [138] WANG Z W, WANG Z W, GU X W, et al. 
Feature selection based on Bayesian network for chiller fault diagnosis from the perspective of field applications[J]. Applied Thermal Engineering, 2018, 129: 674-683. doi: 10.1016/j.applthermaleng.2017.10.079 [139] YEO C, BHANDARI J, ABBASSI R, et al. Dynamic risk analysis of offloading process in floating liquefied natural gas (FLNG) platform using Bayesian network[J]. Journal of Loss Prevention in the Process Industries, 2016, 41: 259-269. doi: 10.1016/j.jlp.2016.04.002 -
