Singing voice separation method using multi-stage progressive gated convolutional network



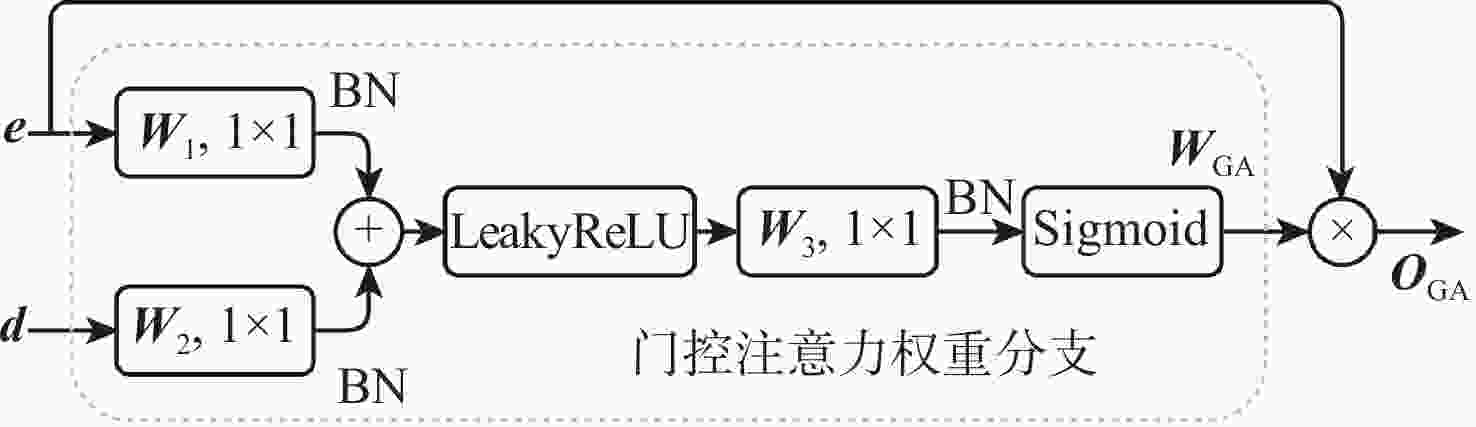

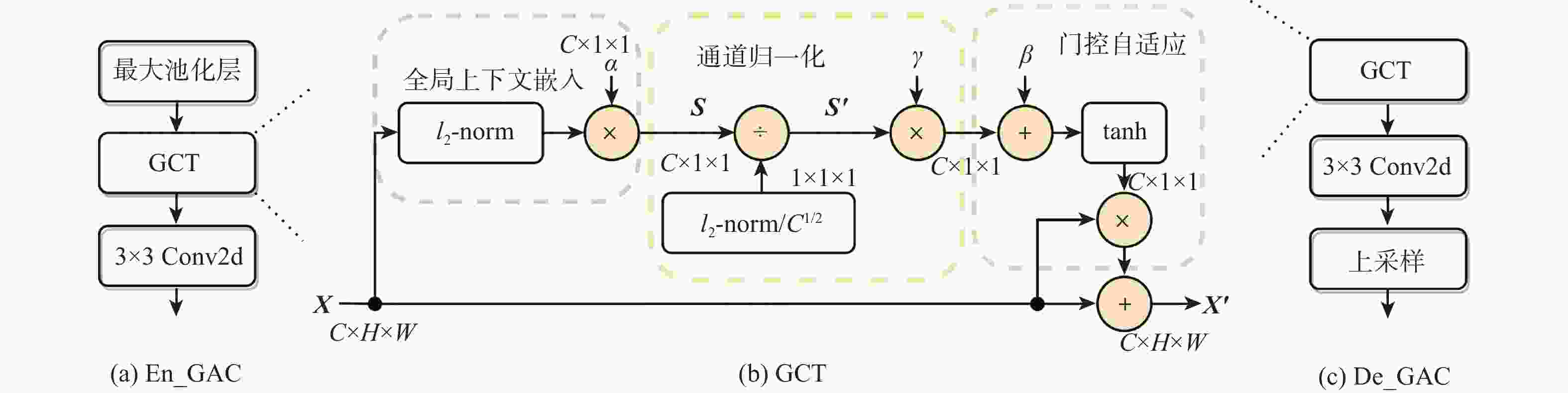

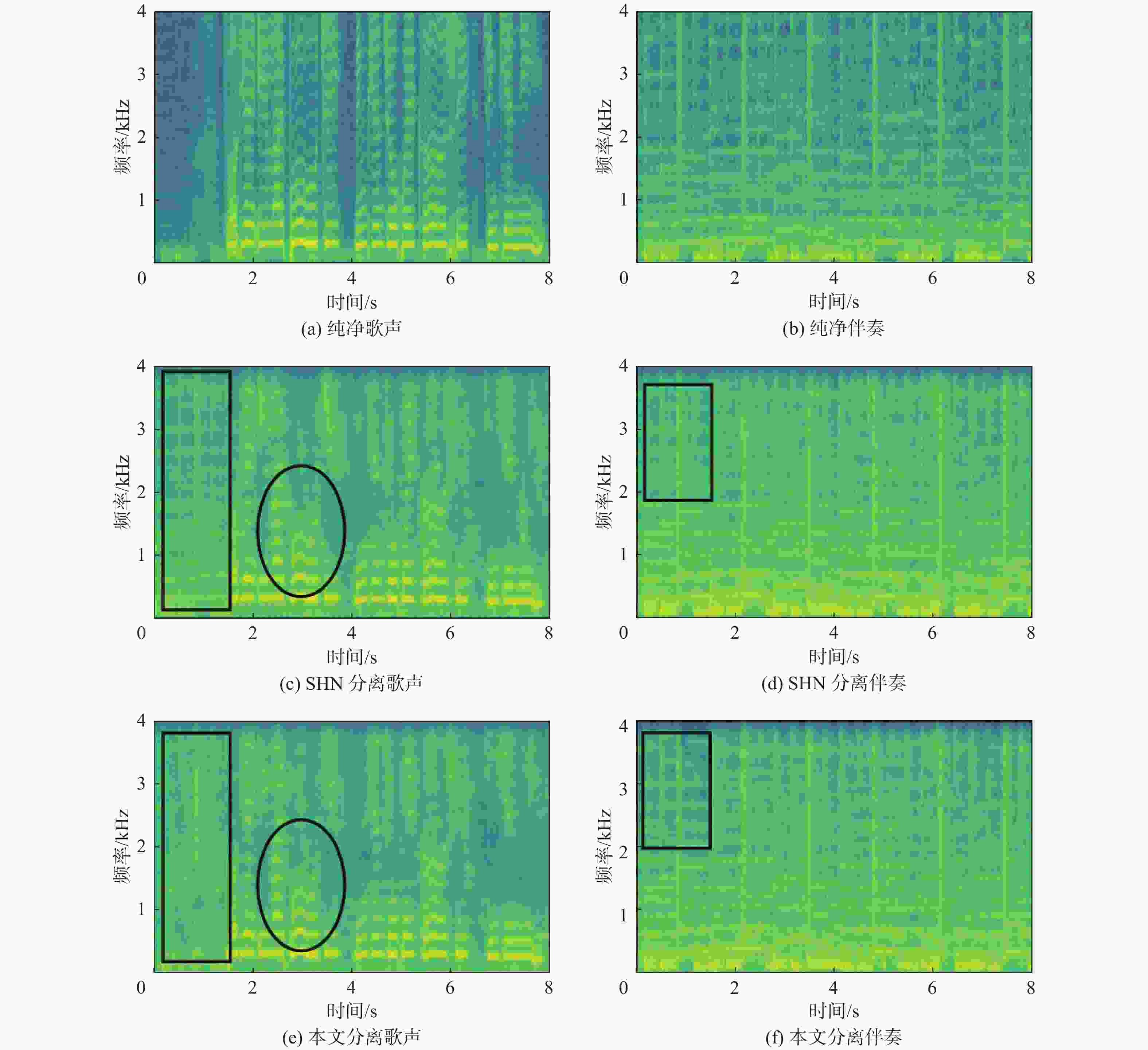

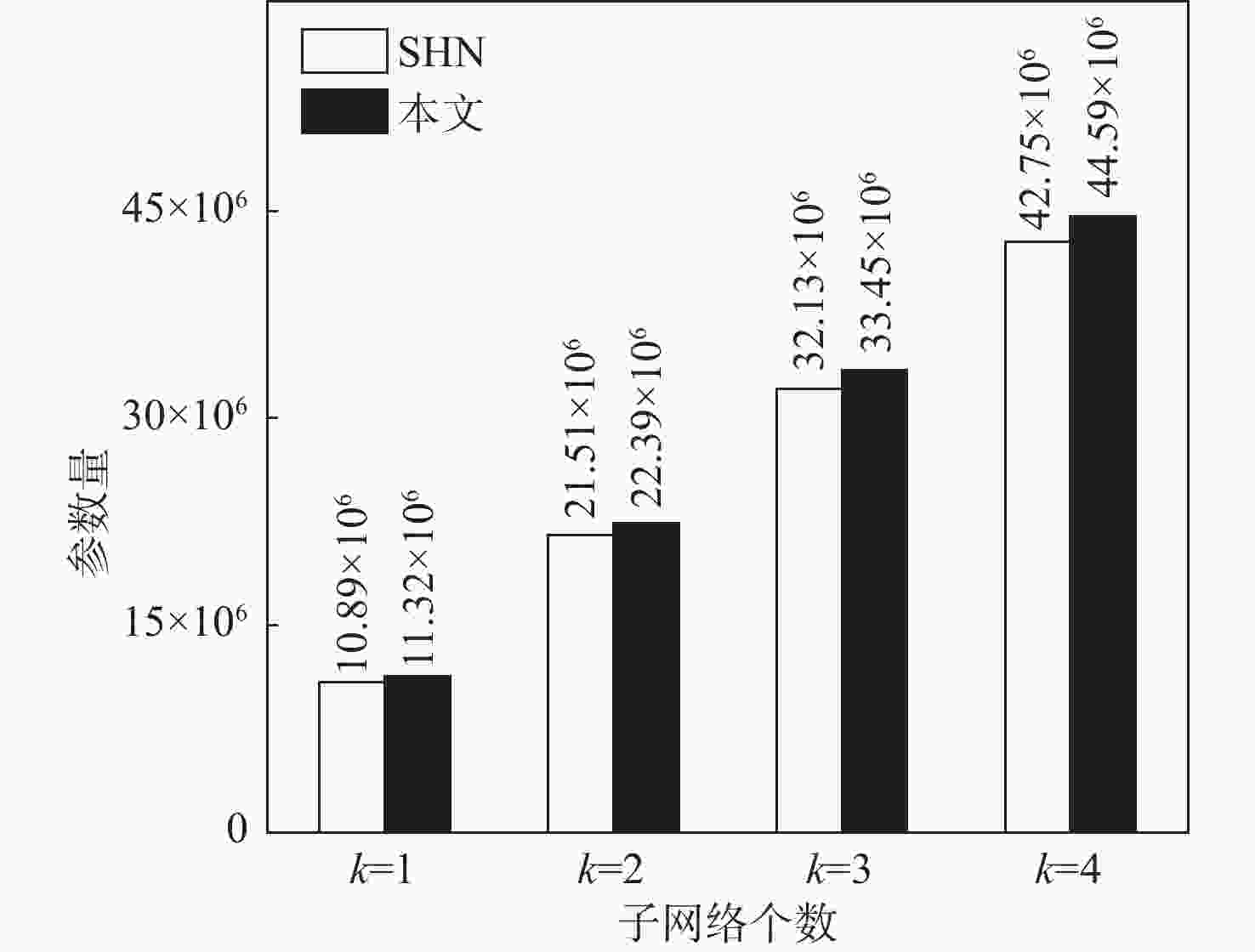

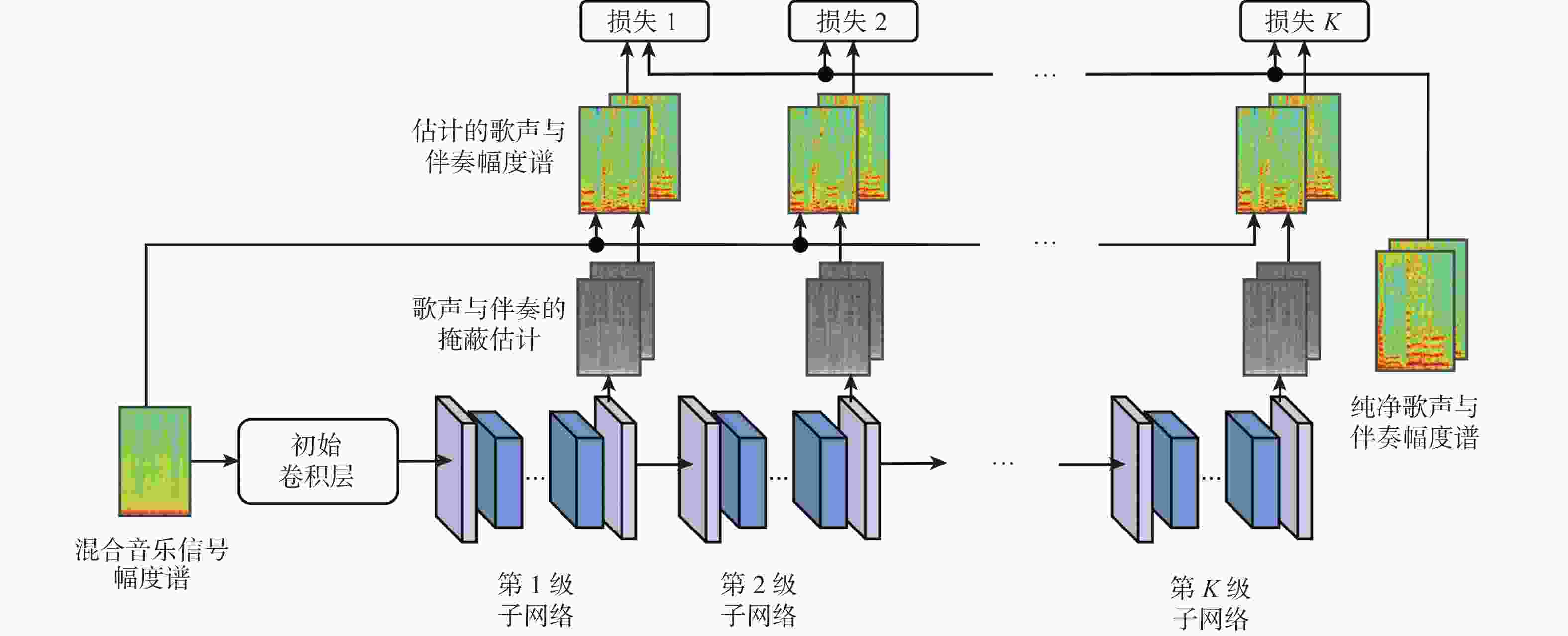

Abstract: Current singing voice separation methods based on convolutional neural networks (CNNs) suffer from a semantic gap when fusing high-level and low-level features, and they overlook the potential value of speech features along the channel dimension. To address both problems, this paper proposes a stacked multi-stage progressive gated convolutional network for singing voice separation. First, a gated adaptive convolution (GAC) unit is designed in each subnetwork to learn and extract the time-frequency features of songs and to strengthen competition and cooperation among feature channels. Second, to reduce the semantic error introduced when shallow and deep network information is fused, a gated attention mechanism is added between the encoder and decoder layers of each subnetwork. Finally, a supervised attention (SA) module is placed between successive subnetworks to selectively pass on useful information and to enable progressive learning across the stages. Comprehensive comparative experiments were carried out on two public datasets, one large and one small. The results show that, compared with representative models of recent years, the proposed method holds an advantage in separating both the singing voice and the background accompaniment.
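The abstract does not spell out the internals of the GAC unit, but Table 1 lists a GCT layer with $\varepsilon = 10^{-5}$, and the reference list includes the gated channel transformation of [18]. As a rough illustration of how such channel gating can make channels compete or cooperate, the following is a minimal sketch of a GCT-style gate in plain Python. It follows the formulation in [18] as best understood here, not the authors' implementation; the function name, the list-based data layout, and the toy values are assumptions.

```python
import math


def gated_channel_transform(x, alpha, gamma, beta, eps=1e-5):
    """Apply GCT-style channel gating (illustrative sketch only).

    x is a list of C channels; each channel is a flat list of
    time-frequency values. alpha, gamma and beta hold one learnable
    scalar per channel.
    """
    num_channels = len(x)
    # 1) Global context embedding: scaled L2 norm of every channel.
    s = [a * math.sqrt(sum(v * v for v in ch) + eps) for a, ch in zip(alpha, x)]
    # 2) Channel normalization: each embedding relative to all channels.
    denom = math.sqrt(sum(v * v for v in s) + eps)
    s_hat = [math.sqrt(num_channels) * v / denom for v in s]
    # 3) Gating adaptation: one gate per channel, equal to 1 when gamma = beta = 0.
    gates = [1.0 + math.tanh(g * n + b) for g, n, b in zip(gamma, s_hat, beta)]
    return [[gate * v for v in ch] for gate, ch in zip(gates, x)]


# Toy input (hypothetical): channel 0 carries far more energy than channel 1.
x = [[1.0, 2.0], [0.1, 0.2]]
identity = gated_channel_transform(x, [1.0, 1.0], [0.0, 0.0], [0.0, 0.0])
gated = gated_channel_transform(x, [1.0, 1.0], [1.0, 1.0], [0.0, 0.0])
```

With `gamma` and `beta` at zero the gate leaves the input unchanged, so the layer can start as an identity mapping during training. With a positive `gamma`, the high-energy channel receives a larger gate than the low-energy one.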


Key words:

- singing voice separation
- neural network
- multi-stage progressive
- adaptive
- supervised attention


Table 1. Subnetwork structure parameters

| Layer | Parameters | Input dimensions | Output dimensions |
| --- | --- | --- | --- |
| Initial convolutional layer | $d_c = 7$, 1, 1, $s_c = 1$ | 512×64×1 | 512×64×64 |
| En_GAC_1 | $\varepsilon = 10^{-5}$, $d_c = 3$, $s_c = 1$, $p = 2$ | 512×64×64 | 256×32×64 |
| En_GAC_2 | $\varepsilon = 10^{-5}$, $d_c = 3$, $s_c = 1$, $p = 2$ | 256×32×64 | 128×16×128 |
| En_GAC_3 | $\varepsilon = 10^{-5}$, $d_c = 3$, $s_c = 1$, $p = 2$ | 128×16×128 | 64×8×192 |
| En_GAC_4 | $\varepsilon = 10^{-5}$, $d_c = 3$, $s_c = 1$, $p = 2$ | 64×8×192 | 32×4×256 |
| GCT+Conv2d | $d_c = 3$, $s_c = 1$, $\varepsilon = 10^{-5}$ | 32×4×256 | 32×4×320 |
| De_GAC_4 | $d_c = 3$, $s_c = 1$, $\varepsilon = 10^{-5}$ | 32×4×320 | 64×8×256 |
| De_GAC_3 | $d_c = 3$, $s_c = 1$, $\varepsilon = 10^{-5}$ | 64×8×256 | 128×16×192 |
| De_GAC_2 | $d_c = 3$, $s_c = 1$, $\varepsilon = 10^{-5}$ | 128×16×192 | 256×32×128 |
| De_GAC_1 | $d_c = 3$, $s_c = 1$, $\varepsilon = 10^{-5}$ | 256×32×128 | 512×64×64 |
| Output convolutional layer | $d_c = 3$, 1, $s_c = 1$ | 512×64×64 | 512×64×64 |
| Conv2d_1 | $d_c = 1$, $s_c = 1$ | 512×64×64 | 512×64×2 |
| Conv2d_2 | $d_c = 1$, $s_c = 1$ | 512×64×2 | 512×64×64 |
| Conv2d_3 | $d_c = 1$, $s_c = 1$ | 512×64×64 | 512×64×64 |
| SA | $d_c = 1$, $s_c = 1$ | 512×64×64 | 512×64×64 |
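The encoder and decoder dimensions in Table 1 can be checked with a short shape trace. The sketch below assumes that each encoder unit halves both spatial axes ($p = 2$) and that each decoder unit doubles them, which agrees with the input listed for En_GAC_2 and with the decoder rows. The function name and argument layout are hypothetical; only the channel counts are taken from the table.

```python
def trace_shapes(freq, time, encoder_channels, bottleneck_channels,
                 decoder_channels, pool=2):
    """Return (layer name, output shape) pairs for one subnetwork."""
    shapes = []
    for i, ch in enumerate(encoder_channels, start=1):
        # Assumed: pooling with p = 2 halves the frequency and time axes.
        freq, time = freq // pool, time // pool
        shapes.append((f"En_GAC_{i}", (freq, time, ch)))
    # The bottleneck keeps the spatial size and only changes the channels.
    shapes.append(("GCT+Conv2d", (freq, time, bottleneck_channels)))
    for i, ch in zip(range(len(decoder_channels), 0, -1), decoder_channels):
        # Assumed: upsampling doubles the frequency and time axes.
        freq, time = freq * pool, time * pool
        shapes.append((f"De_GAC_{i}", (freq, time, ch)))
    return shapes


# Start from the 512x64 feature map produced by the initial convolution.
shapes = trace_shapes(512, 64, [64, 128, 192, 256], 320, [256, 192, 128, 64])
for name, shape in shapes:
    print(name, "x".join(str(n) for n in shape))
```

Four halvings take the 512×64 map down to 32×4 at the bottleneck, and four doublings restore it, so the subnetwork output matches its input resolution and subnetworks can be stacked directly.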

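Table 2. Effect of the number of subnetworks on separation performance (dB)

| Number of subnetworks | GNSDR (vocal) | GNSDR (accomp.) | GSIR (vocal) | GSIR (accomp.) | GSAR (vocal) | GSAR (accomp.) |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | 12.02 | 10.63 | 18.41 | 14.76 | 13.55 | 12.83 |
| 2 | 12.14 | 10.67 | 18.62 | 14.78 | 13.61 | 12.96 |
| 3 | 12.21 | 10.68 | 18.76 | 14.81 | 13.68 | 13.17 |
| 4 | 12.24 | 10.73 | 18.85 | 14.88 | 13.68 | 13.31 |
| 5 | 12.22 | 10.70 | 18.86 | 14.86 | 13.60 | 13.30 |
| 6 | 12.13 | 10.65 | 18.71 | 14.72 | 13.50 | 13.11 |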

Table 3. Effects of different modules on separation performance (dB)

| Model | GNSDR (vocal) | GNSDR (accomp.) | GSIR (vocal) | GSIR (accomp.) | GSAR (vocal) | GSAR (accomp.) |
| --- | --- | --- | --- | --- | --- | --- |
| SHN[13] | 10.51 | 9.88 | 16.01 | 14.24 | 12.53 | 12.36 |
| GAC-SHN | 11.98 | 10.65 | 18.35 | 14.73 | 13.45 | 12.78 |
| GA-SHN | 10.89 | 10.32 | 17.64 | 14.36 | 12.76 | 12.66 |
| GAC-GA-SHN | 12.01 | 10.69 | 18.25 | 14.78 | 13.66 | 12.91 |
| GAC-GA-SA-SHN (this paper) | 12.24 | 10.73 | 18.85 | 14.88 | 13.64 | 13.31 |

Table 4. Comparison of separation performance of different methods on the MIR-1K dataset (dB)[9,14-16,19-21]

| Model | GNSDR (vocal) | GNSDR (accomp.) | GSIR (vocal) | GSIR (accomp.) | GSAR (vocal) | GSAR (accomp.) |
| --- | --- | --- | --- | --- | --- | --- |
| MLRR[19] | 3.85 | 4.19 | 5.63 | 7.80 | 10.70 | 8.22 |
| DRNN[20] | 7.45 | - | 13.08 | - | 9.68 | - |
| ModGD[21] | 7.50 | - | 13.73 | - | 9.45 | - |
| U-Net[9] | 7.43 | 7.45 | 11.79 | 11.43 | 10.42 | 10.38 |
| U-Net-SE[16] | 7.49 | 7.46 | 11.78 | 11.42 | 10.38 | 10.41 |
| FC-Net[16] | 10.85 | 9.91 | 16.95 | 14.09 | 12.54 | 12.64 |
| TSMS-G-CS[16] | 10.93 | 9.96 | 17.02 | 14.13 | 12.59 | 12.68 |
| SHN[15] | 10.51 | 9.88 | 16.01 | 14.24 | 12.53 | 12.36 |
| SE-SHN[16] | 10.48 | 9.86 | 15.99 | 14.21 | 12.52 | 12.35 |
| SA-SHN[14] | 11.66 | 10.60 | 17.65 | 14.92 | 13.38 | 13.17 |
| P-SHN[15] | 10.83 | 9.89 | 16.54 | 14.01 | 12.67 | 12.65 |
| This paper | 12.24 | 10.73 | 18.85 | 14.88 | 13.68 | 13.31 |
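The GNSDR, GSIR, and GSAR columns are global scores aggregated over all test clips. The text here does not restate the definition, so the sketch below uses the one commonly adopted for MIR-1K evaluations (for example in [20]): the per-clip NSDR is the SDR of the estimate minus the SDR of the unprocessed mixture, and the global score is the mean over clips weighted by clip length. The function names and the numbers in the example are hypothetical.

```python
def weighted_mean(values, lengths):
    """Mean of per-clip values, weighted by clip length."""
    total = sum(lengths)
    return sum(w * v for w, v in zip(lengths, values)) / total


def gnsdr(sdr_estimate, sdr_mixture, lengths):
    """Global normalized SDR in dB.

    sdr_estimate: per-clip SDR of the separated source against the reference.
    sdr_mixture:  per-clip SDR of the raw mixture against the same reference.
    lengths:      per-clip durations (any consistent unit).
    """
    nsdr = [est - mix for est, mix in zip(sdr_estimate, sdr_mixture)]
    return weighted_mean(nsdr, lengths)


# Hypothetical two-clip example: NSDR values are 9 dB and 6 dB.
score = gnsdr([10.0, 8.0], [1.0, 2.0], [4.0, 6.0])
print(round(score, 2))
```

GSIR and GSAR can be aggregated with the same `weighted_mean` helper applied directly to the per-clip SIR and SAR values, since only the SDR is normalized against the mixture.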

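Table 5. MOS scores of singing voice and accompaniment for 5 music segments in the MIR-1K dataset

| Song | SHN vocal: male | SHN vocal: female | SHN vocal: avg | SHN accomp.: male | SHN accomp.: female | SHN accomp.: avg | This paper vocal: male | This paper vocal: female | This paper vocal: avg | This paper accomp.: male | This paper accomp.: female | This paper accomp.: avg |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| annar_5_03 | 3.32 | 3.31 | 3.31 | 3.43 | 3.42 | 3.42 | 3.51 | 3.53 | 3.52 | 3.45 | 3.42 | 3.43 |
| Bobon_2_06 | 3.25 | 3.28 | 3.26 | 3.39 | 3.40 | 3.39 | 3.42 | 3.43 | 3.42 | 3.40 | 3.41 | 3.40 |
| Kenshin_3_08 | 3.26 | 3.23 | 3.24 | 3.46 | 3.42 | 3.44 | 3.36 | 3.34 | 3.35 | 3.43 | 3.47 | 3.45 |
| Khair_6_06 | 3.36 | 3.34 | 3.35 | 3.32 | 3.36 | 3.33 | 3.48 | 3.50 | 3.49 | 3.32 | 3.33 | 3.32 |
| Yifen_1_07 | 3.38 | 3.40 | 3.39 | 3.43 | 3.45 | 3.44 | 3.56 | 3.58 | 3.57 | 3.42 | 3.45 | 3.43 |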

[1] RAFII Z, PARDO B. Repeating pattern extraction technique (REPET): a simple method for music/voice separation[J]. IEEE Transactions on Audio, Speech, and Language Processing, 2013, 21(1): 73-84. doi: 10.1109/TASL.2012.2213249

[2] HUANG P S, CHEN S D, SMARAGDIS P, et al. Singing-voice separation from monaural recordings using robust principal component analysis[C]//Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. Piscataway: IEEE Press, 2012: 57-60.

[3] GRAIS E M, ERDOGAN H. Single channel speech music separation using nonnegative matrix factorization with sliding windows and spectral masks[C]//Proceedings of the Interspeech 2011. Copenhagen: ISCA, 2011: 1773-1776.

[4] UHLICH S, GIRON F, MITSUFUJI Y. Deep neural network based instrument extraction from music[C]//Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. Piscataway: IEEE Press, 2015: 2135-2139.

[5] SPRECHMANN P, BRUNA J, LECUN Y. Audio source separation with discriminative scattering networks[C]//Proceedings of the Latent Variable Analysis and Signal Separation. Berlin: Springer, 2015: 259-267.

[6] ZHANG T Q, XIONG M, ZHANG T, et al. A separation method of singing and accompaniment combining discriminative training deep neural network[J]. Acta Acustica, 2019, 44(3): 393-400 (in Chinese).

[7] CHEN J T, WANG D L. Long short-term memory for speaker generalization in supervised speech separation[J]. The Journal of the Acoustical Society of America, 2017, 141(6): 4705. doi: 10.1121/1.4986931

[8] ZHANG T. Research on separation method of vocal accompaniment in single channel music signal[D]. Chongqing: Chongqing University of Posts and Telecommunications, 2020: 43-57 (in Chinese).

[9] JANSSON A, HUMPHREY E, MONTECCHIO N, et al. Singing voice separation with deep U-Net convolutional networks[C]//Proceedings of the 18th International Society for Music Information Retrieval Conference. [S. l.]: DBLP, 2017: 745-751.

[10] STOLLER D, EWERT S, DIXON S. Wave-U-Net: a multi-scale neural network for end-to-end audio source separation[EB/OL]. (2018-06-08)[2023-06-01]. http://arxiv.org/abs/1806.03185v1.

[11] DÉFOSSEZ A, USUNIER N, BOTTOU L, et al. Demucs: deep extractor for music sources with extra unlabeled data remixed[EB/OL]. (2019-09-03)[2023-06-01]. http://arxiv.org/abs/1909.01174v1.

[12] IBTEHAZ N, RAHMAN M S. MultiResUNet: rethinking the U-Net architecture for multimodal biomedical image segmentation[J]. Neural Networks, 2020, 121: 74-87. doi: 10.1016/j.neunet.2019.08.025

[13] PARK S, KIM T, LEE K, et al. Music source separation using stacked hourglass networks[EB/OL]. (2018-06-22)[2023-06-01]. http://arxiv.org/abs/1805.08559v2.

[14] YUAN W T, WANG S B, LI X R, et al. A skip attention mechanism for monaural singing voice separation[J]. IEEE Signal Processing Letters, 2019, 26(10): 1481-1485. doi: 10.1109/LSP.2019.2935867

[15] BHATTARAI B, PANDEYA Y R, LEE J. Parallel stacked hourglass network for music source separation[J]. IEEE Access, 2020, 8: 206016-206027. doi: 10.1109/ACCESS.2020.3037773

[16] MAI F. Research on music source separation algorithm based on deep convolution neural network and its application[D]. Chengdu: University of Electronic Science and Technology of China, 2021: 20-70 (in Chinese).

[17] SUBAKAN C, RAVANELLI M, CORNELL S, et al. Attention is all you need in speech separation[C]//Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. Piscataway: IEEE Press, 2021: 21-25.

[18] YANG Z X, ZHU L C, WU Y, et al. Gated channel transformation for visual recognition[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 11791-11800.

[19] YANG Y H. Low-rank representation of both singing voice and music accompaniment via learned dictionaries[C]//Proceedings of the 14th International Society for Music Information Retrieval Conference. Curitiba: [s. n.], 2013: 427-432.

[20] HUANG P S, KIM M, HASEGAWA-JOHNSON M, et al. Joint optimization of masks and deep recurrent neural networks for monaural source separation[J]. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2015, 23(12): 2136-2147. doi: 10.1109/TASLP.2015.2468583

[21] SEBASTIAN J, MURTHY H A. Group delay based music source separation using deep recurrent neural networks[C]//Proceedings of the International Conference on Signal Processing and Communications. Piscataway: IEEE Press, 2016: 1-5.

[22] DÉFOSSEZ A, USUNIER N, BOTTOU L, et al. Music source separation in the waveform domain[EB/OL]. (2021-04-28)[2023-06-01]. http://arxiv.org/abs/1911.13254v2.

[23] YUAN W T, DONG B F, WANG S B, et al. Evolving multi-resolution pooling CNN for monaural singing voice separation[J]. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2021, 29: 807-822. doi: 10.1109/TASLP.2021.3051331

[24] TAKAHASHI N, MITSUFUJI Y. D3Net: densely connected multidilated DenseNet for music source separation[EB/OL]. (2021-05-27)[2023-06-01]. http://arxiv.org/abs/2010.01733v4.

[25] LAI W H, WANG S L. RPCA-DRNN technique for monaural singing voice separation[J]. EURASIP Journal on Audio, Speech, and Music Processing, 2022, 2022: 4. doi: 10.1186/s13636-022-00236-9

[26] NI X, REN J. FC-U2-Net: a novel deep neural network for singing voice separation[J]. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2022, 30: 489-494. doi: 10.1109/TASLP.2022.3140561
