Image and text sentiment analysis method based on joint and interactive attention


摘要:

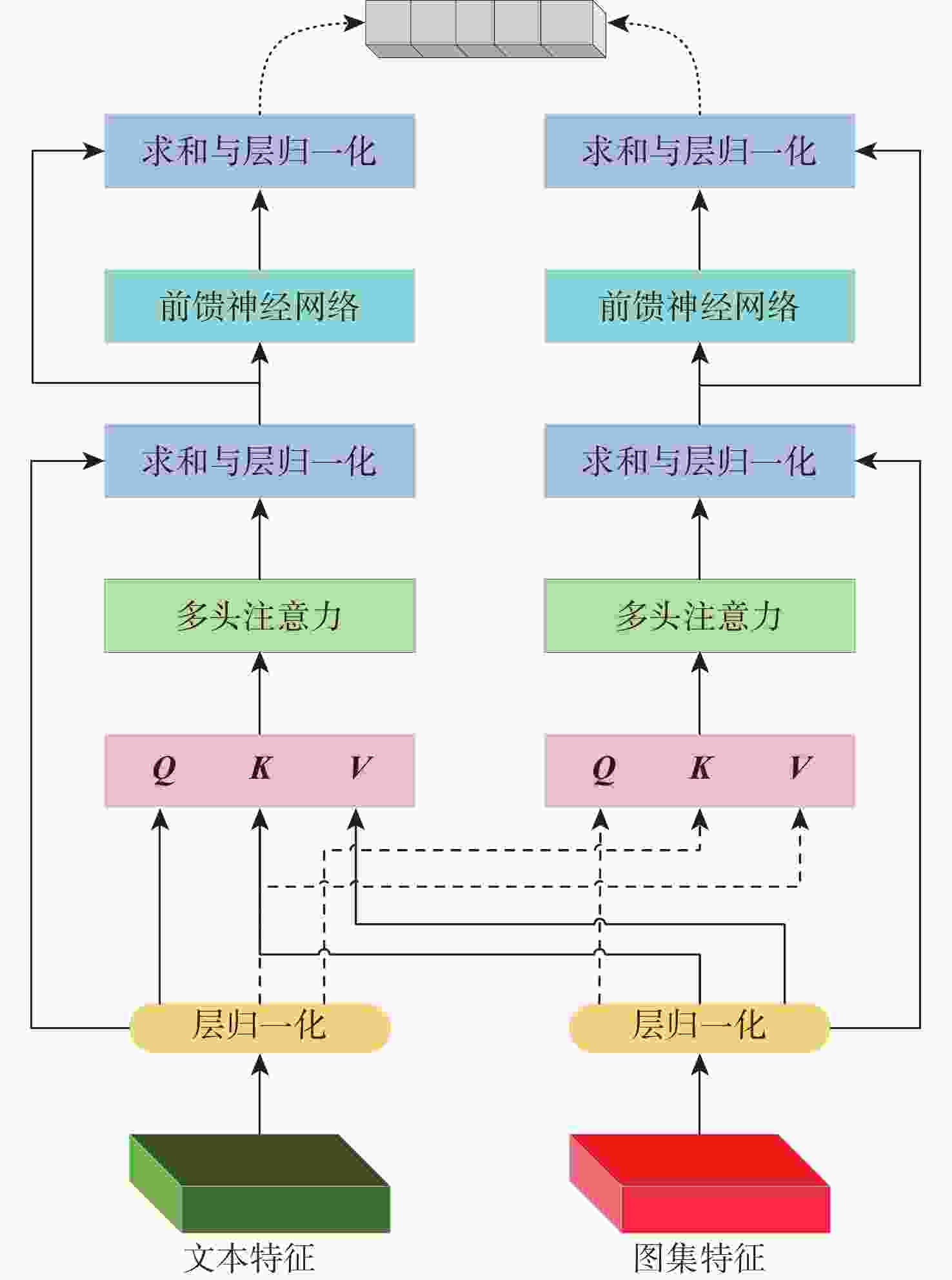

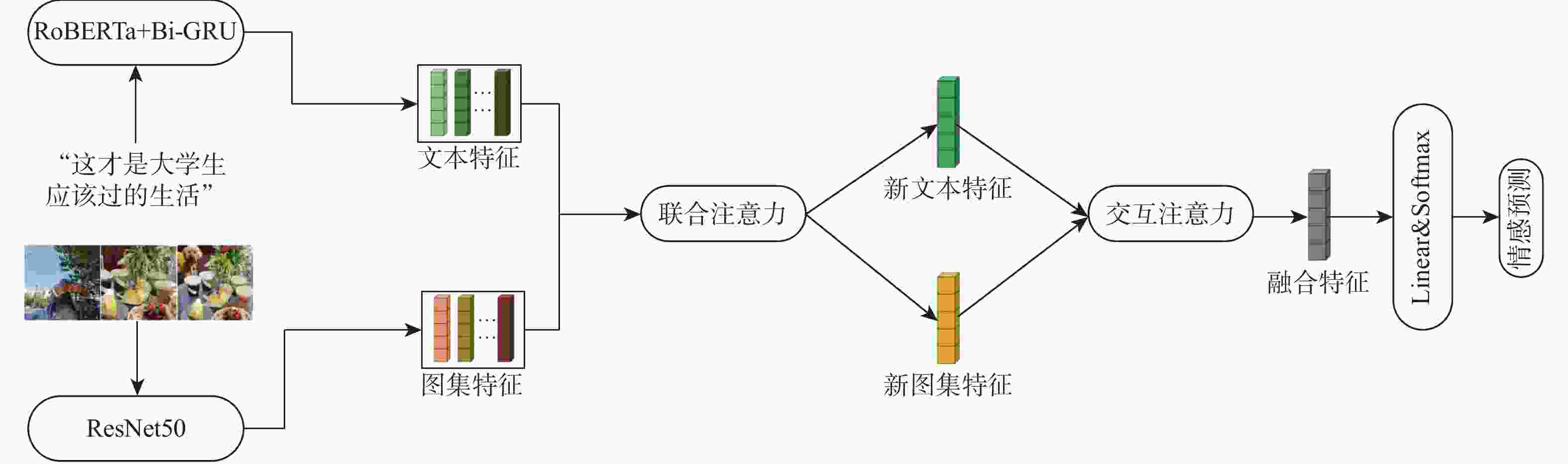

社交媒体中的图文情感对于引导舆论走向具有重要意义,越来越受到自然语言处理(NLP)领域的广泛关注。当前,社交媒体图文情感分析的研究对象主要为单幅图像文本对,针对无时序性及多样性的图集文本对的研究相对较少,为有效挖掘图集中图像与文本之间情感一致性信息,提出基于联合交互注意力的图文情感分析(SA-JIA)方法。该方法使用RoBERTa和双向门控循环单元(Bi-GRU)来提取文本表达特征,使用ResNet50获取图像视觉特征,利用联合注意力来找到图文情感信息表达一致的显著区域,获得新的文本和图像视觉特征,采用交互注意力关注模态间的特征交互,并进行多模态特征融合,进而完成情感分类任务。在IsTS-CN数据集和CCIR20-YQ数据集上进行了实验验证,结果表明:所提方法能够提升社交媒体图文情感分析的性能。

Abstract:The image and text sentiment in social media is an important factor affecting public opinion and is receiving increasing attention in the field of natural language processing (NLP). Currently, the analysis of image and text sentiment in social media has mainly focused on single image and text pairs, while little attention has been given to image and text pairs of atlas that are non-chronological and diverse. To explore the sentiment consistency between images and texts in the atlas, a method for analyzing image and text sentiment in social media based on joint and interactive attention (SA-JIA) was proposed. The method used RoBERTa and bidirectional gated recurrent unit (Bi-GRU) to extract textual expression features and ResNet50 to obtain image visual features. Joint attention was employed to identify salient regions where image and text sentiment align, obtaining new textual and image visual features. Interactive attention was utilized to focus on inter-modal feature interactions and multimodal feature fusion, finally obtaining the sentiment categories. Experimental validation was conducted on the IsTS-CN dataset and the CCIR20-YQ dataset, showing that the proposed method can enhance the performance of analyzing image and text sentiment in social media.
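The abstract does not give the attention formulas, so the following is only a minimal NumPy sketch of the data flow it describes, under stated assumptions. Random arrays stand in for the RoBERTa + Bi-GRU token features and the ResNet50 region features. The joint-attention step is approximated by a generic co-attention over a text-image affinity matrix, and the interactive-attention step by scaled dot-product cross-attention. The three-class output, the array sizes, and all function names are hypothetical and are not taken from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def joint_attention(text, image):
    """Assumed stand-in for joint attention: a generic co-attention.

    An affinity matrix scores every (token, region) pair; each token and each
    region is then re-weighted by how strongly it matches the other modality.
    """
    d = text.shape[-1]
    affinity = text @ image.T / np.sqrt(d)      # (n_tokens, n_regions)
    text_w = softmax(affinity.max(axis=1))      # saliency of each token
    image_w = softmax(affinity.max(axis=0))     # saliency of each region
    return text * text_w[:, None], image * image_w[:, None]


def cross_attention(query, context):
    """Assumed stand-in for interactive attention: scaled dot-product
    attention in which every query row attends over the context rows."""
    d = query.shape[-1]
    weights = softmax(query @ context.T / np.sqrt(d), axis=-1)
    return weights @ context, weights


rng = np.random.default_rng(0)
d = 200                                   # hidden dimension used for illustration
text = rng.normal(size=(12, d))           # placeholder for RoBERTa + Bi-GRU features
image = rng.normal(size=(4 * 49, d))      # placeholder for ResNet50 features of a 4-image set

# Step 1: keep the parts of each modality that agree with the other one.
text_new, image_new = joint_attention(text, image)

# Step 2: let the two modalities attend to each other.
text_ctx, w_text = cross_attention(text_new, image_new)
image_ctx, w_image = cross_attention(image_new, text_new)

# Step 3: fuse by mean pooling + concatenation, then classify.
fused = np.concatenate([text_ctx.mean(axis=0), image_ctx.mean(axis=0)])
W = rng.normal(size=(3, 2 * d)) * 0.01    # untrained weights, 3 hypothetical classes
probs = softmax(W @ fused)
```

In a real implementation the placeholders would be replaced by encoder outputs projected to a common dimension, and the attention and classifier weights would be learned; the sketch only shows how the stages connect.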


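Table 1. Dataset partitioning

| Dataset | Training set (samples) | Validation set (samples) | Test set (samples) |
| --- | --- | --- | --- |
| IsTS-CN | 8 277 | 1 035 | 1 035 |
| CCIR20-YQ | 54 044 | 6 756 | 6 756 |

Table 2. SA-JIA method parameter settings

| Learning rate | Maximum sentence length | Dropout rate | Number of attention heads | Loss | Hidden layer dimension |
| --- | --- | --- | --- | --- | --- |
| 2×10⁻⁵ | 200 | 0.5 | 5 | CrossEntropyLoss | 200 |

Table 3. Comparative experimental results of image and text sentiment analysis on IsTS-CN and CCIR20-YQ datasets

| Modality | Method | P (IsTS-CN) | P (CCIR20-YQ) | R (IsTS-CN) | R (CCIR20-YQ) | F1 (IsTS-CN) | F1 (CCIR20-YQ) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Text | Att-Bi-LSTM[25] | 0.574 | 0.691 | 0.601 | 0.583 | 0.566 | 0.607 |
| Text | BERT[7] | 0.617 | 0.601 | 0.608 | 0.634 | 0.612 | 0.617 |
| Text | RoBERTa[9] | 0.637 | 0.682 | 0.638 | 0.764 | 0.637 | 0.708 |
| Image-text | VistaNet[3] | 0.634 | 0.627 | 0.626 | 0.619 | 0.628 | 0.623 |
| Image-text | mBERT[26] | 0.733 | 0.709 | 0.723 | 0.702 | 0.727 | 0.705 |
| Image-text | MMBT[15] | 0.711 | 0.732 | 0.747 | 0.670 | 0.722 | 0.691 |
| Image-text | EF-CapTrBERT[16] | 0.723 | 0.717 | 0.720 | 0.692 | 0.721 | 0.703 |
| Image-text | TIBERT[27] | 0.716 | 0.695 | 0.726 | 0.687 | 0.721 | 0.692 |
| Image-text | SA-JIA | 0.722 | 0.741 | 0.778 | 0.708 | 0.734 | 0.718 |

Table 4. Ablation experimental results on IsTS-CN and CCIR20-YQ datasets

| Method | P (IsTS-CN) | P (CCIR20-YQ) | R (IsTS-CN) | R (CCIR20-YQ) | F1 (IsTS-CN) | F1 (CCIR20-YQ) |
| --- | --- | --- | --- | --- | --- | --- |
| JIA-JA | 0.670 | 0.738 | 0.724 | 0.671 | 0.611 | 0.661 |
| JIA-IA | 0.649 | 0.718 | 0.614 | 0.611 | 0.602 | 0.608 |
| SA-JIA | 0.722 | 0.741 | 0.778 | 0.708 | 0.734 | 0.718 |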
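As a reading aid for the ablation results in Table 4, the short Python sketch below computes how far the F1 score of each ablated variant falls below that of the full SA-JIA model on each dataset. The F1 values are copied from Table 4; the dictionary layout and the helper name `f1_drop` are illustrative choices, not part of the paper.

```python
# F1 scores as reported in Table 4 (ablation study).
f1 = {
    "JIA-JA": {"IsTS-CN": 0.611, "CCIR20-YQ": 0.661},
    "JIA-IA": {"IsTS-CN": 0.602, "CCIR20-YQ": 0.608},
    "SA-JIA": {"IsTS-CN": 0.734, "CCIR20-YQ": 0.718},
}


def f1_drop(variant, dataset):
    """F1 of the full SA-JIA model minus F1 of the given variant."""
    return round(f1["SA-JIA"][dataset] - f1[variant][dataset], 3)


# Drop in F1 for each ablated variant on each dataset.
drops = {
    variant: {dataset: f1_drop(variant, dataset) for dataset in f1[variant]}
    for variant in ("JIA-JA", "JIA-IA")
}

for variant, per_dataset in drops.items():
    print(variant, per_dataset)
```

On both datasets the full model has the highest F1, and the JIA-IA variant shows the larger gap of the two ablations.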

[1] XIA E, YUE H, LIU H F. Tweet sentiment analysis of the 2020 U.S. presidential election[C]//Proceedings of the 30th Web Conference. New York: ACM, 2021.

[2] BONHEME L, GRZES M. SESAM at SemEval-2020 task 8: investigating the relationship between image and text in sentiment analysis of memes[C]//Proceedings of the Fourteenth Workshop on Semantic Evaluation. Barcelona: International Committee for Computational Linguistics, 2020.

[3] TRUONG Q T, LAUW H W. VistaNet: visual aspect attention network for multimodal sentiment analysis[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2019, 33(1): 305-312.

[4] STONE P J. Thematic text analysis: new agendas for analyzing text content[M]. London: Routledge, 2020: 35-54.

[5] WIEBE J M, BRUCE R F, O’HARA T P. Development and use of a gold-standard data set for subjectivity classifications[C]//Proceedings of the 37th Annual Meeting of the Association for Computational Linguistics on Computational Linguistics. Stroudsburg: Association for Computational Linguistics, 1999.

[6] BASIRI M E, NEMATI S, ABDAR M, et al. ABCDM: an attention-based bidirectional CNN-RNN deep model for sentiment analysis[J]. Future Generation Computer Systems, 2021, 115: 279-294.

[7] DEVLIN J, CHANG M W, LEE K, et al. BERT: pre-training of deep bidirectional transformers for language understanding[C]//Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg: Association for Computational Linguistics, 2019: 4171-4186.

[8] DAI J Q, YAN H, SUN T X, et al. Does syntax matter? A strong baseline for aspect-based sentiment analysis with RoBERTa[C]//Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg: Association for Computational Linguistics, 2021: 1816-1829.

[9] LIU Y H, OTT M, GOYAL N, et al. RoBERTa: a robustly optimized BERT pretraining approach[EB/OL]. (2019-07-26)[2023-05-20]. http://arxiv.org/abs/1907.11692v1.

[10] WU C, XIONG Q Y, GAO M, et al. A relative position attention network for aspect-based sentiment analysis[J]. Knowledge and Information Systems, 2021, 63(2): 333-347. doi: 10.1007/s10115-020-01512-w

[11] WU T, PENG J J, ZHANG W Q, et al. Video sentiment analysis with bimodal information-augmented multi-head attention[J]. Knowledge-Based Systems, 2022, 235: 107676. doi: 10.1016/j.knosys.2021.107676

[12] HAN W, CHEN H, GELBUKH A, et al. Bi-bimodal modality fusion for correlation-controlled multimodal sentiment analysis[C]//Proceedings of the International Conference on Multimodal Interaction. New York: ACM, 2021.

[13] WANG J H, LIU Z, LIU T T, et al. Multimodal sentiment analysis based on multilevel feature fusion attention network[J]. Journal of Chinese Information Processing, 2022, 36(10): 145-154 (in Chinese).

[14] WEN H L, YOU S D, FU Y. Cross-modal context-gated convolution for multi-modal sentiment analysis[J]. Pattern Recognition Letters, 2021, 146: 252-259. doi: 10.1016/j.patrec.2021.03.025

[15] KIELA D, BHOOSHAN S, FIROOZ H, et al. Supervised multimodal bitransformers for classifying images and text[EB/OL]. (2020-11-12)[2023-05-20]. http://arxiv.org/abs/1909.02950.

[16] KHAN Z, FU Y. Exploiting BERT for multimodal target sentiment classification through input space translation[C]//Proceedings of the 29th ACM International Conference on Multimedia. New York: ACM, 2021.

[17] VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[EB/OL]. (2017-06-12)[2023-05-20]. http://arxiv.org/abs/1706.03762.

[18] TSAI Y H, BAI S J, LIANG P P, et al. Multimodal transformer for unaligned multimodal language sequences[EB/OL]. (2019-06-01)[2023-05-20]. http://arxiv.org/abs/1906.00295.

[19] CHO K, VAN MERRIENBOER B, GULCEHRE C, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation[EB/OL]. (2014-06-03)[2023-05-20]. http://arxiv.org/abs/1406.1078v3.

[20] DENG J, DONG W, SOCHER R, et al. ImageNet: a large-scale hierarchical image database[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2009: 248-255.

[21] HE K M, ZHANG X Y, REN S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 770-778.

[22] BENGIO Y, SCHWENK H, SENÉCAL J S, et al. Neural probabilistic language models[M]. Berlin: Springer, 2006: 137-186.

[23] BA J L, KIROS J R, HINTON G E. Layer normalization[EB/OL]. (2016-07-21)[2023-05-22]. http://arxiv.org/abs/1607.06450v1.

[24] CAO M L. Research on sentiment analysis method of social media graphics and text based on auxiliary information extraction and fusion[D]. Wuhan: Wuhan University of Science and Technology, 2022 (in Chinese).

[25] ZHOU P, SHI W, TIAN J, et al. Attention-based bidirectional long short-term memory networks for relation classification[C]//Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Stroudsburg: Association for Computational Linguistics, 2016.

[26] YU J F, JIANG J. Adapting BERT for target-oriented multimodal sentiment classification[C]//Proceedings of the 28th International Joint Conference on Artificial Intelligence. Vienna: IJCAI, 2019: 5408-5414.

[27] YU B H, WEI J X, YU B, et al. Feature-guided multimodal sentiment analysis towards industry 4.0[J]. Computers and Electrical Engineering, 2022, 100: 107961. doi: 10.1016/j.compeleceng.2022.107961
