
摘要:

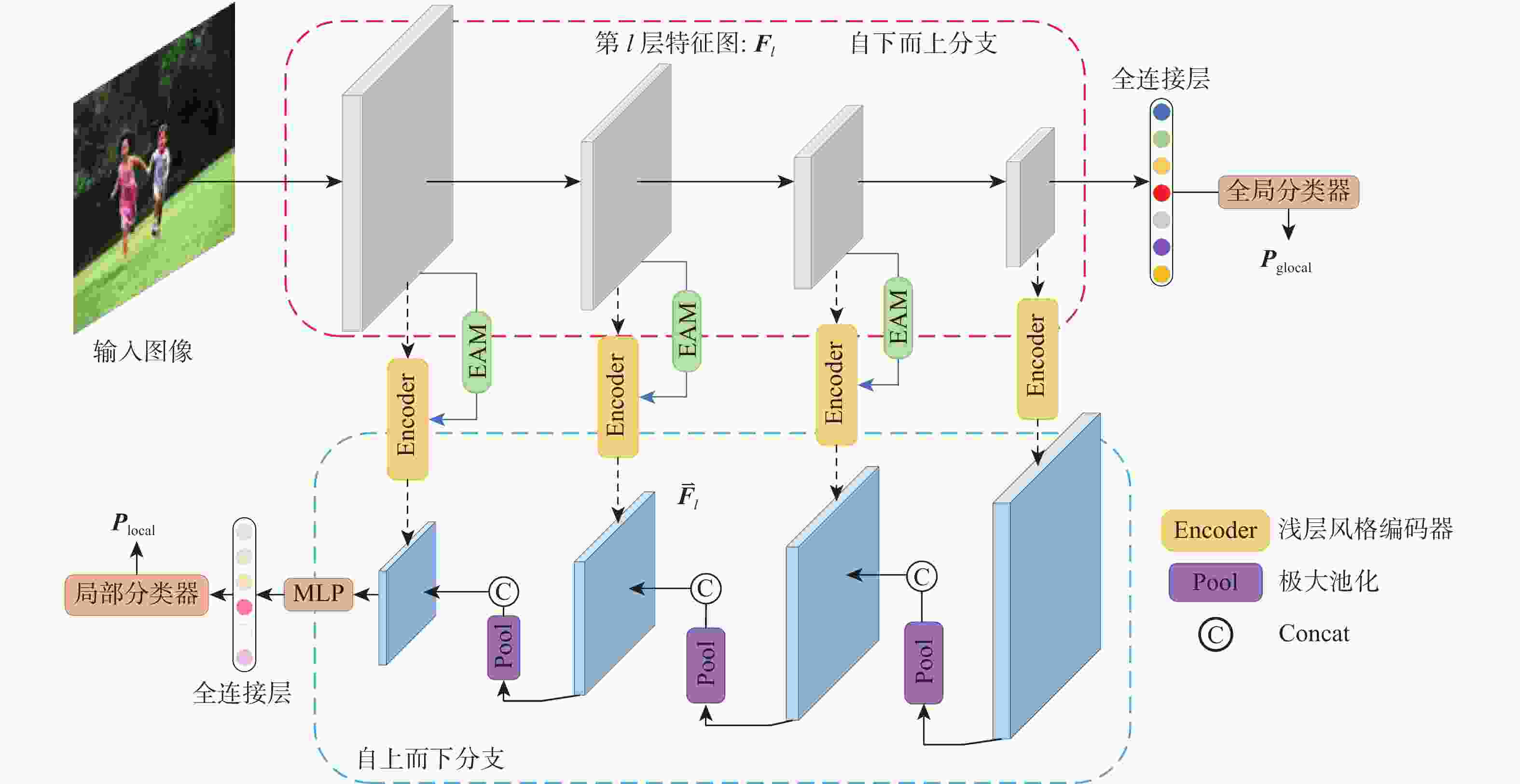

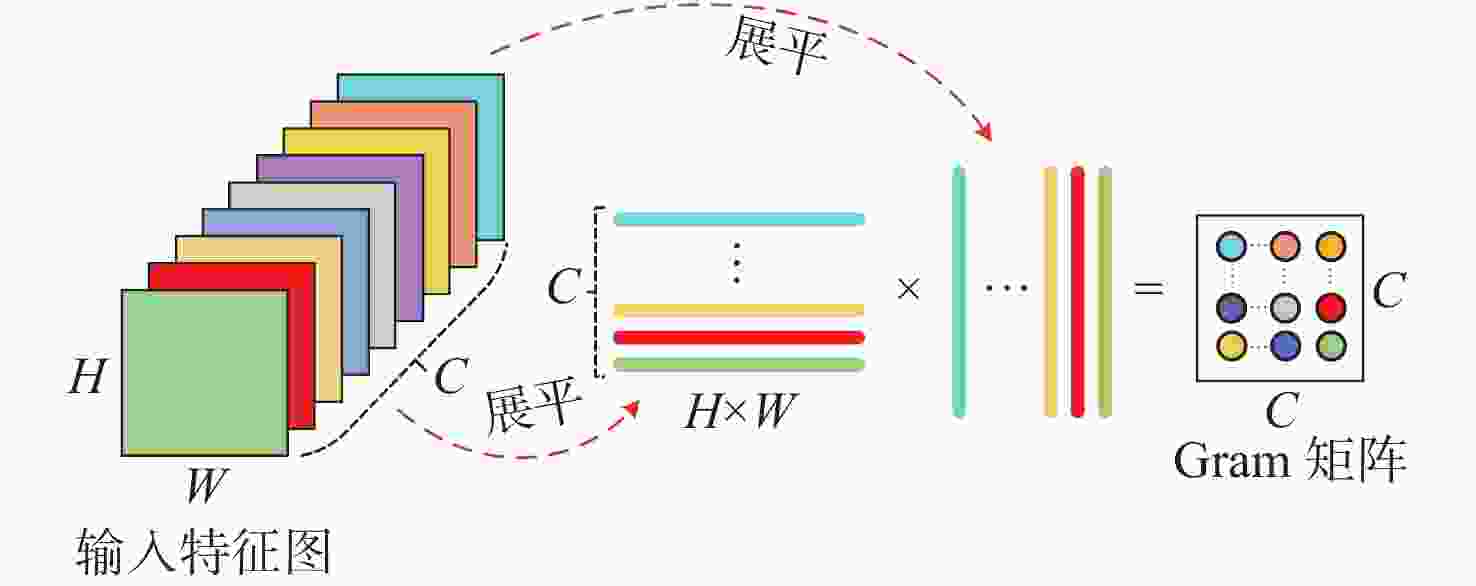

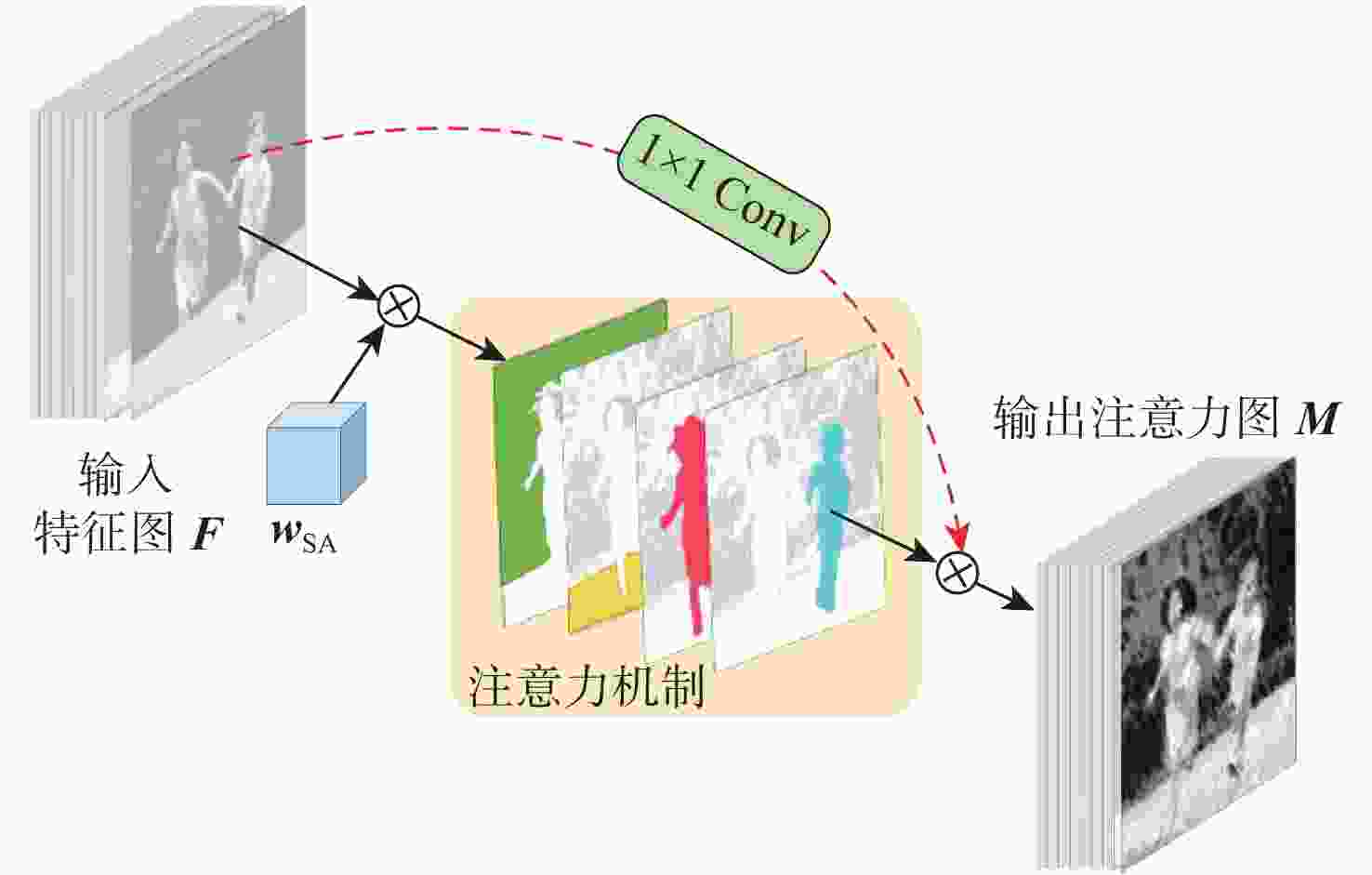

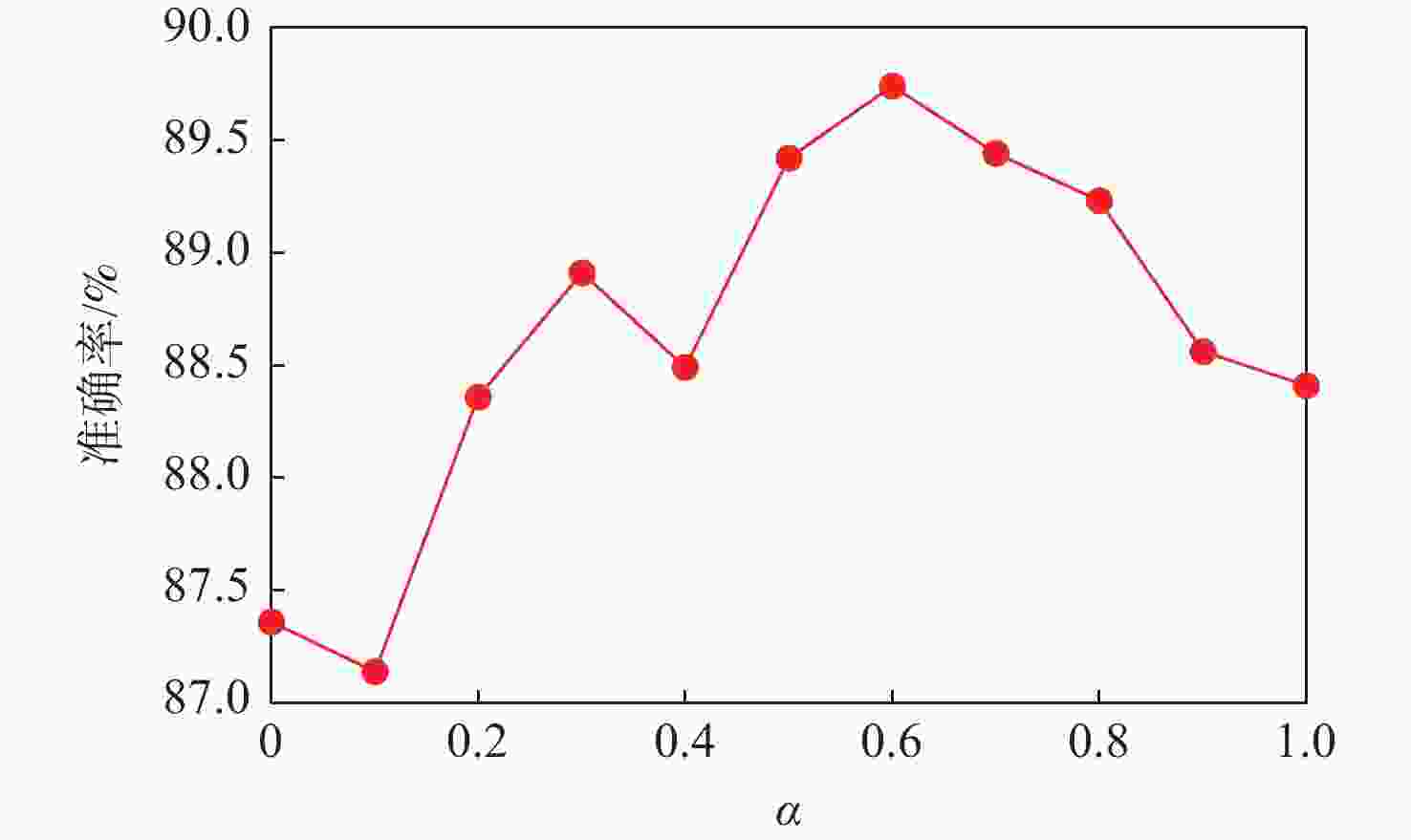

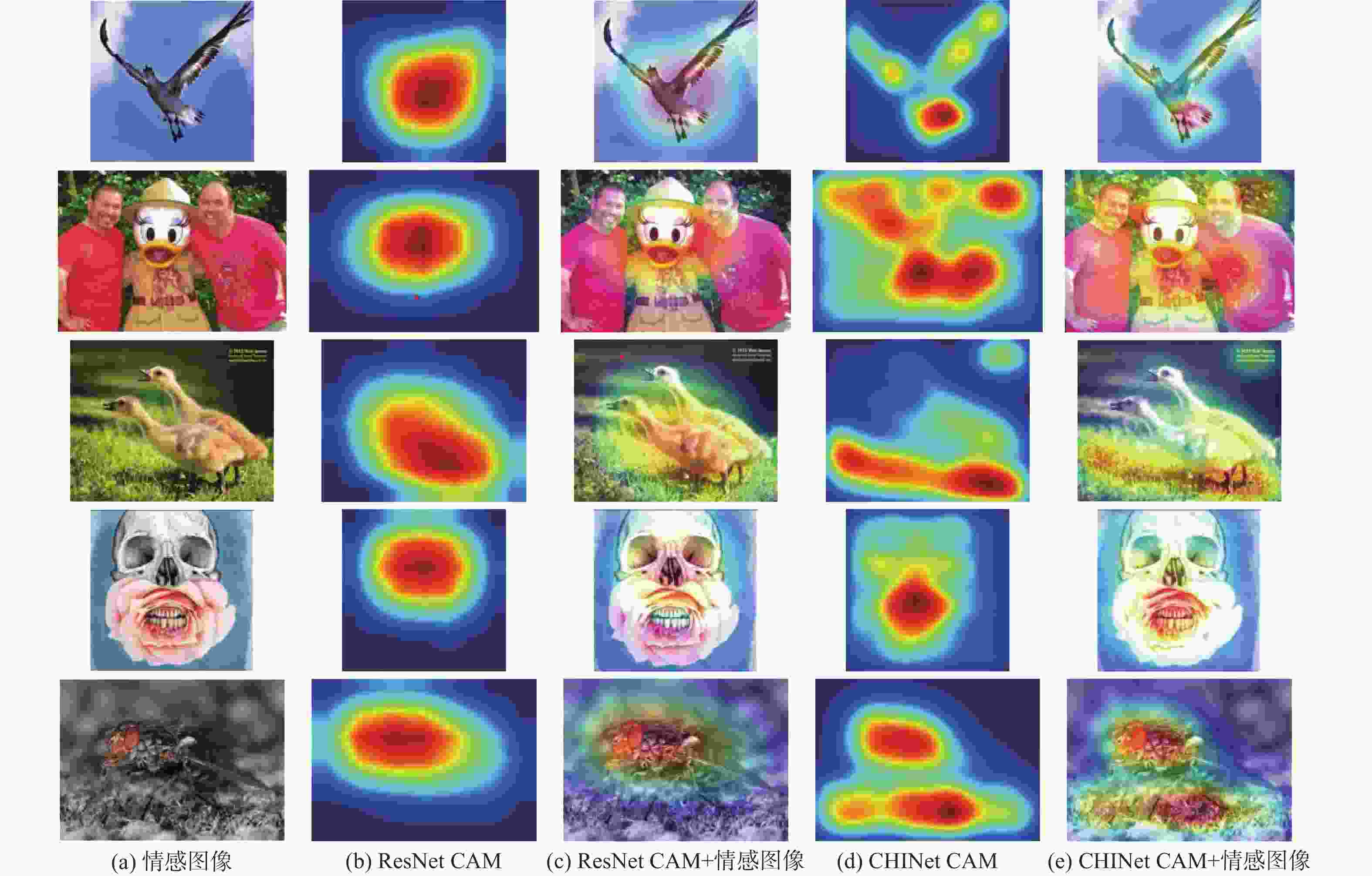

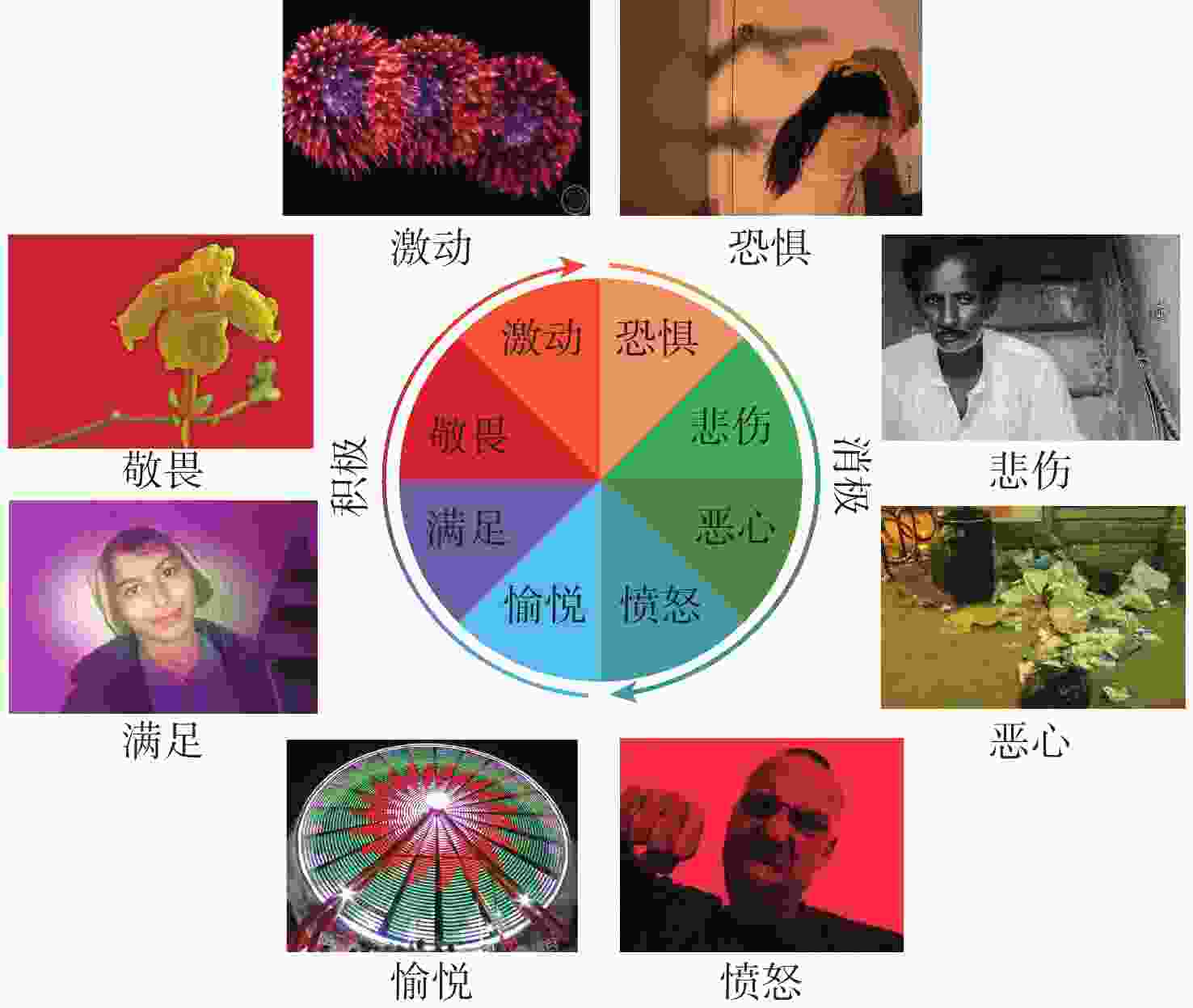

图像情感分析旨在分析和理解视觉内容所传达的情感,其挑战在于弥合潜在视觉特征与抽象情感间的情感鸿沟。现有的深度模型试图一次性通过直接在全局范围内学习有辨别力的高级情感表征来弥合鸿沟,但忽略了深度模型各层特征之间的层次关系,导致上下文特征间的关联缺失。为此,提出一种上下文层次交互网络(CHINet)来建立层次结构中的上下文信息和情感之间的相关性模型。该模型包含2个分支:自下而上的主分支直接在高级语义层次上学习全局情感表征,针对该分支的不同层次特征,通过构建浅层风格编码器和情感激活注意力机制来分别提取风格表示并定位潜在情感激活区域;所提取的特征被级联到金字塔结构作为自上而下分支,从而建模上下文层次相关性并为情感表示提供浅层视觉特征。通过全局和局部学习将低级风格属性和高级图像语义整合到一起。实验结果表明:所提模型在FI数据集上较同类方法(包括多层次特征融合方法和结合了局部情感区域的方法)提升了情感识别准确率。

Abstract:Image sentiment analysis aims to analyze the emotions conveyed by visual content. A key challenge in this field is to bridge the affective gap between latent visual features and abstract emotions. Existing deep learning models attempt to address this issue by directly learning discriminative high-level emotional representations globally at once but overlook the hierarchical relationship between features at each layer of the deep model, resulting in a lack of correlation between contextual features. Therefore, this paper proposed a context-hierarchical interaction network (CHINet) to model the correlation between contextual information and sentiment within the hierarchy. The model consists of two branches: a bottom-up branch, which first directly learns the global emotional representation at the high-level semantic level; then, for different feature level within the branch, it extracts the style representation and localizes potential emotion activation regions by shallow style encoder and emotion activation attention mechanism. The extracted features are then cascaded into a pyramid structure as top-down branches, modeling contextual hierarchical dependencies and providing shallow visual features for emotion representation. Finally, global and local learning integrate shallow image styles with high-level semantics. Experiments show that the proposed model improves emotion recognition accuracy on the FI dataset compared with related methods, including multi-level feature fusion methods and approaches incorporating local emotional regions.
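The abstract names CHINet's building blocks but gives no formulas here. To make the data flow concrete, the following is a minimal plain-Python sketch that wires comparable components together. Every concrete choice is an assumption, not the paper's method: Gram matrices stand in for the shallow style encoder (a common style descriptor), a channel-mean softmax attention stands in for the emotion activation attention, an FPN-style upsample-and-add pathway stands in for the pyramid top-down branch, and the global, local and style vectors are simply concatenated. All function names are hypothetical, and nothing is learned.

```python
import math
from typing import List

# A feature map is C channels x N spatial positions (spatial grid flattened to 1-D).
FeatureMap = List[List[float]]


def gram_style(feat: FeatureMap) -> List[List[float]]:
    """Assumed style descriptor: Gram matrix G[i][j] = (1/N) * sum_k f_i[k] * f_j[k]."""
    n = len(feat[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in feat] for fi in feat]


def attention_pool(feat: FeatureMap) -> List[float]:
    """Softmax spatial attention, then attention-weighted pooling of every channel.

    Hypothetical stand-in for emotion activation attention: a position's score is
    its mean activation across channels, so strongly activated regions dominate.
    """
    n = len(feat[0])
    scores = [sum(ch[k] for ch in feat) / len(feat) for k in range(n)]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * x for w, x in zip(weights, ch)) for ch in feat]


def upsample(feat: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour upsampling along the flattened spatial axis."""
    return [[x for x in ch for _ in range(factor)] for ch in feat]


def top_down_fuse(levels: List[FeatureMap]) -> FeatureMap:
    """Assumed FPN-style top-down pathway.

    `levels` runs from the deepest (coarsest) map to the shallowest (finest) one;
    all maps share the same channel count, and each finer map's length is an
    integer multiple of the coarser one's.
    """
    fused = levels[0]
    for finer in levels[1:]:
        up = upsample(fused, len(finer[0]) // len(fused[0]))
        fused = [[a + b for a, b in zip(u, f)] for u, f in zip(up, finer)]
    return fused


def chinet_like_descriptor(levels: List[FeatureMap]) -> List[float]:
    """Hypothetical descriptor: global vector + local vector + flattened shallow style."""
    global_vec = [sum(ch) / len(ch) for ch in levels[0]]  # mean-pool the deepest map
    local_vec = attention_pool(top_down_fuse(levels))      # attend over the fused pyramid
    style_vec = [g for row in gram_style(levels[-1]) for g in row]
    return global_vec + local_vec + style_vec


deep = [[1.0, 3.0], [0.0, 2.0]]                          # 2 channels x 2 positions
shallow = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]   # 2 channels x 4 positions
print(chinet_like_descriptor([deep, shallow]))
```

Keeping the fusion parameter-free isolates the data flow; a trainable version would typically add learned projections before each addition and a classifier on top of the descriptor.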


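Table 1. Details of the image emotion datasets

| Dataset | Amusement | Awe | Contentment | Excitement | Positive subtotal | Anger | Disgust | Fear | Sadness | Negative subtotal | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Abstract[9] | 25 | 15 | 63 | 36 | 139 | 3 | 18 | 36 | 32 | 89 | 228 |
| ArtPhoto[9] | 101 | 102 | 70 | 105 | 378 | 77 | 70 | 115 | 166 | 428 | 806 |
| EmotionROI[14] | – | – | – | – | 660* | 330 | 330 | 330 | 330 | 1,320 | 1,980 |
| FI[1] | 4,942 | 3,151 | 5,374 | 2,963 | 16,430 | 1,266 | 1,658 | 1,032 | 2,922 | 6,878 | 23,308 |
| Twitter I[20] | – | – | – | – | 769 | – | – | – | – | 500 | 1,269 |

\* For EmotionROI the source lists two positive-class counts of 330 images each (subtotal 660), which do not map onto the four positive categories above.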
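The subtotal and total columns of Table 1 can be checked mechanically, and the same arithmetic exposes how balanced each dataset is between the two polarities. The following is a minimal plain-Python sketch that covers only the three datasets with a full per-category breakdown; the dictionary and function names are illustrative.

```python
# Per-category image counts transcribed from Table 1 (datasets with full breakdowns only):
# ([amusement, awe, contentment, excitement], [anger, disgust, fear, sadness], stated total)
TABLE1 = {
    "Abstract": ([25, 15, 63, 36], [3, 18, 36, 32], 228),
    "ArtPhoto": ([101, 102, 70, 105], [77, 70, 115, 166], 806),
    "FI": ([4942, 3151, 5374, 2963], [1266, 1658, 1032, 2922], 23308),
}


def polarity_summary(pos, neg, total):
    """Sum the category counts per polarity and check them against the stated total.

    Returns (positive subtotal, negative subtotal, share of positive images).
    """
    p, n = sum(pos), sum(neg)
    if p + n != total:
        raise ValueError(f"subtotals {p} + {n} do not add up to {total}")
    return p, n, p / total


for name, (pos, neg, total) in TABLE1.items():
    p, n, share = polarity_summary(pos, neg, total)
    print(f"{name}: positive={p}, negative={n}, positive share={share:.1%}")
```

All three totals check out. The positive shares come to 61.0% (Abstract), 46.9% (ArtPhoto) and 70.5% (FI), so FI in particular is skewed toward positive images.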

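Table 2. Emotion classification accuracy of CNN models with different numbers of layers on FI

| Model | Layers | Accuracy (%), c = 2 | Accuracy (%), c = 8 |
|---|---|---|---|
| AlexNet[16] | 5 Conv + 2 fc | 65.43 | 42.78 |
| VGG-16[36] | 13 Conv + 2 fc | 81.35 | 63.84 |
| VGG-19[36] | 16 Conv + 2 fc | 82.62 | 64.23 |
| DenseNet-100 w/o fc[37] | 99 Conv | 83.77 | 64.76 |
| DenseNet-100[37] | 99 Conv + 2 fc | 84.21 | 65.08 |
| ResNet-18 w/o fc[34] | 17 Conv | 80.16 | 60.76 |
| ResNet-18[34] | 17 Conv + 2 fc | 81.27 | 61.55 |
| ResNet-50 w/o fc[34] | 49 Conv | 83.52 | 62.81 |
| ResNet-50[34] | 49 Conv + 2 fc | 84.06 | 63.74 |
| ResNet-152 w/o fc[34] | 151 Conv | 84.73 | 66.27 |
| ResNet-152[34] | 151 Conv + 2 fc | 85.19 | 66.44 |

c is the number of classes (c = 2: binary polarity; c = 8: eight emotion categories); fc denotes fully connected layers and "w/o fc" a model without them.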

Table 3. Emotion classification accuracy based on local and global learning

| Model | Accuracy (%) |
|---|---|
| Baseline | 85.19 |
| CHINet w/o G | 87.36 |
| CHINet w/o L | 88.41 |
| CHINet G&L | 89.74 |
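Table 3 is easier to read as gains over the 85.19% baseline. Besides the absolute gain in percentage points, the sketch below reports the relative error reduction, (acc − baseline) / (100 − baseline), i.e. the fraction of the baseline's misclassifications that each variant removes. It assumes, in line with the abstract, that "w/o G" and "w/o L" denote the model without global and without local learning respectively (the abbreviations are not expanded in this section); the names are illustrative.

```python
# Binary accuracies (%) from Table 3. "w/o G" / "w/o L" are read, as an assumption,
# as "without global learning" / "without local learning".
BASELINE = 85.19
VARIANTS = {"CHINet w/o G": 87.36, "CHINet w/o L": 88.41, "CHINet G&L": 89.74}


def ablation_gain(acc, baseline=BASELINE):
    """Return (gain in percentage points, relative reduction of the error rate)."""
    gain = acc - baseline
    error_reduction = gain / (100.0 - baseline)  # share of baseline errors removed
    return round(gain, 2), round(error_reduction, 3)


for name, acc in VARIANTS.items():
    gain, red = ablation_gain(acc)
    print(f"{name}: +{gain:.2f} points, {red:.1%} fewer errors than the baseline")
```

On this reading, combining both components (G&L) gains 4.55 points and removes about 30.7% of the baseline's errors, more than either single-component variant.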

Table 4. Comparison of binary emotion polarity classification accuracy on the FI dataset

| Group | Network | Accuracy (%) |
|---|---|---|
| Baseline models | AlexNet[16] | 60.54 |
| | VGGNet[36] | 70.64 |
| | ResNet[34] | 72.22 |
| | Fine-tuned AlexNet[6] | 72.43 |
| | Fine-tuned VGGNet[36] | 83.05 |
| | Fine-tuned ResNet-50[34] | 85.19 |
| Comparison methods | PCNN (VGGNet)[20] | 75.34 |
| | DeepSentiBank[21] | 61.54 |
| | AR[29] | 86.35 |
| | VSF[23] | 88.11 |
| | MSRCA[30] | 87.40 |
| | MLR[18] | 87.87 |
| Proposed model | CHINet w/o G | 87.36 |
| | CHINet w/o L | 88.41 |
| | CHINet G&L | 89.74 |
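Table 4 places CHINet G&L against six comparison methods. A small helper that picks the strongest competitor and reports the margin makes the comparison explicit. The dictionary transcribes the "comparison methods" rows of Table 4, and the helper name is illustrative.

```python
# Binary FI accuracies (%) of the comparison methods in Table 4.
COMPARISON = {
    "PCNN (VGGNet)": 75.34, "DeepSentiBank": 61.54, "AR": 86.35,
    "VSF": 88.11, "MSRCA": 87.40, "MLR": 87.87,
}
CHINET_GL = 89.74


def margin_over_best(results, ours):
    """Return the strongest competing method and our margin over it, in points."""
    best = max(results, key=results.get)
    return best, round(ours - results[best], 2)


print(margin_over_best(COMPARISON, CHINET_GL))  # ('VSF', 1.63)
```

Applied to the comparison rows of Table 6, the same helper again selects VSF (70.46%), giving CHINet G&L (71.56%) a margin of 1.10 points in the eight-class setting.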

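Table 5. Comparison of binary emotion polarity classification accuracy on small-scale datasets (unit: %)

| Group | Method | ArtPhoto[9] | Abstract[9] | Twitter I[20] 5-agree | Twitter I 4-agree | Twitter I 3-agree | EmotionROI[14] |
|---|---|---|---|---|---|---|---|
| Hand-crafted features | PAEF[13] | 67.85 | 70.05 | 72.90 | 69.61 | 67.92 | 75.24 |
| | SentiBank | 67.74 | 64.95 | 71.32 | 68.28 | 66.63 | 66.18 |
| Deep learning | DeepSentiBank[21] | 68.73 | 71.19 | 76.35 | 70.15 | 71.25 | 70.11 |
| | PCNN (VGGNet)[20] | 70.96 | 70.84 | 82.54 | 76.52 | 76.36 | 73.58 |
| | Fine-tuned VGG[36] | 70.09 | 72.48 | 84.35 | 82.26 | 76.75 | 77.02 |
| | AR[29] | 74.80 | 76.03 | 88.65 | 85.10 | 81.06 | 81.26 |
| | R-CNNGSR[31] | † | † | † | † | † | † |
| | VSF[23] | † | † | † | † | † | † |
| | MLR[18] | 75.63 | 77.85 | 89.77 | 85.72 | 81.49 | 83.08 |
| Proposed model | CHINet w/o G | 78.61 | 79.07 | 86.54 | 83.04 | 81.36 | 82.26 |
| | CHINet w/o L | 81.12 | 81.15 | 89.51 | 82.95 | 82.17 | 82.47 |
| | CHINet G&L | 83.27 | 82.33 | 90.52 | 86.43 | 84.37 | 83.53 |

† The extracted source lists only three values for R-CNNGSR (75.02, 75.89, 81.36) and four for VSF (81.62, 81.82, 83.10, 83.17); the dataset columns they belong to cannot be recovered.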

Table 6. Comparison of multi-category emotion classification performance on the FI dataset

| Group | Network | Accuracy (%) |
|---|---|---|
| Baseline models | AlexNet[16] | 49.54 |
| | VGGNet-19[36] | 61.74 |
| | ResNet[34] | 53.08 |
| | Inception[38] | 60.12 |
| | Inception-ResNet[39] | 62.77 |
| Comparison methods | DeepSentiBank[21] | 51.29 |
| | PDANet[40] | 69.42 |
| | MKN[19] | 63.92 |
| | CycleEmotionGAN[41] | 66.79 |
| | Deep metric learning[42] | 68.37 |
| | MldrNet[10] | 67.24 |
| | OSSCM[22] | 69.32 |
| | WSCNet[3] | 70.07 |
| | VSF[23] | 70.46 |
| | MSRCA[30] | 69.05 |
| | MLR[18] | 67.49 |
| Proposed model | CHINet w/o G | 69.94 |
| | CHINet w/o L | 70.32 |
| | CHINet G&L | 71.56 |

[1] YOU Q Z, LUO J B, JIN H L, et al. Building a large scale dataset for image emotion recognition: the fine print and the benchmark[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2016: 308-314.

[2] XU L W, WANG Z T, WU B, et al. MDAN: multi-level dependent attention network for visual emotion analysis[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 9469-9478.

[3] SHE D Y, YANG J F, CHENG M M, et al. WSCNet: weakly supervised coupled networks for visual sentiment classification and detection[J]. IEEE Transactions on Multimedia, 2020, 22(5): 1358-1371. doi: 10.1109/TMM.2019.2939744

[4] YANG H S, FAN Y Y, LV G Y, et al. Exploiting emotional concepts for image emotion recognition[J]. The Visual Computer, 2023, 39(5): 2177-2190. doi: 10.1007/s00371-022-02472-8

[5] YANG J Y, LI J, WANG X M, et al. Stimuli-aware visual emotion analysis[J]. IEEE Transactions on Image Processing, 2021, 30: 7432-7445. doi: 10.1109/TIP.2021.3106813

[6] LIANG Y, MAEDA K, OGAWA T, et al. Chain centre loss: a psychology inspired loss function for image sentiment analysis[J]. Neurocomputing, 2022, 495: 118-128. doi: 10.1016/j.neucom.2022.04.016

[7] ZHANG H, LI H P, PENG G Q, et al. Image emotion recognition via fusion multi-level representations[J]. Journal of Computer-Aided Design & Computer Graphics, 2023, 35(10): 1566-1576 (in Chinese).

[8] ZHANG H M, XU M. Multiscale emotion representation learning for affective image recognition[J]. IEEE Transactions on Multimedia, 2022, 25: 2203-2212.

[9] MACHAJDIK J, HANBURY A. Affective image classification using features inspired by psychology and art theory[C]//Proceedings of the 18th ACM International Conference on Multimedia. New York: ACM, 2010.

[10] RAO T R, LI X X, XU M. Learning multi-level deep representations for image emotion classification[J]. Neural Processing Letters, 2020, 51(3): 2043-2061. doi: 10.1007/s11063-019-10033-9

[11] ZHAN M. Affective analysis of abstract images using style representation[D]. Jilin: Jilin University, 2023 (in Chinese).

[12] YIN C. Research on image emotion recognition model based on feature extraction and content generation[J]. System Simulation Technology, 2023, 19(2): 141-147 (in Chinese). doi: 10.3969/j.issn.1673-1964.2023.02.008

[13] ZHAO S C, YAO X X, YANG J F, et al. Affective image content analysis: two decades review and new perspectives[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(10): 6729-6751. doi: 10.1109/TPAMI.2021.3094362

[14] PENG K C, CHEN T, SADOVNIK A, et al. A mixed bag of emotions: model, predict, and transfer emotion distributions[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2015: 860-868.

[15] CAMPOS V, SALVADOR A, GIRO-I-NIETO X, et al. Diving deep into sentiment: understanding fine-tuned CNNs for visual sentiment prediction[C]//Proceedings of the 1st International Workshop on Affect & Sentiment in Multimedia. New York: ACM, 2015.

[16] KRIZHEVSKY A, SUTSKEVER I, HINTON G E. ImageNet classification with deep convolutional neural networks[J]. Communications of the ACM, 2017, 60(6): 84-90. doi: 10.1145/3065386

[17] ZHU X G, LI L, ZHANG W G, et al. Dependency exploitation: a unified CNN-RNN approach for visual emotion recognition[C]//Proceedings of the 26th International Joint Conference on Artificial Intelligence. New York: ACM, 2017: 3595-3601.

[18] ZHANG H, XU D, LUO G F, et al. Learning multi-level representations for affective image recognition[J]. Neural Computing and Applications, 2022, 34(16): 14107-14120. doi: 10.1007/s00521-022-07139-y

[19] SHE D Y, SUN M, YANG J F. Learning discriminative sentiment representation from strongly- and weakly supervised CNNs[J]. ACM Transactions on Multimedia Computing, Communications, and Applications, 2019, 15(3s): 1-19.

[20] YOU Q Z, LUO J B, JIN H L, et al. Robust image sentiment analysis using progressively trained and domain transferred deep networks[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2015, 29(1): 1-8.

[21] CHEN T, BORTH D, DARRELL T, et al. DeepSentiBank: visual sentiment concept classification with deep convolutional neural networks[EB/OL]. (2014-10-30)[2023-06-01]. http://arxiv.org/abs/1410.8586.

[22] ZHANG J, CHEN M, SUN H, et al. Object semantics sentiment correlation analysis enhanced image sentiment classification[J]. Knowledge-Based Systems, 2020, 191: 105245. doi: 10.1016/j.knosys.2019.105245

[23] YAMAMOTO T, TAKEUCHI S, NAKAZAWA A. Image emotion recognition using visual and semantic features reflecting emotional and similar objects[J]. IEICE Transactions on Information and Systems, 2021, 104(10): 1691-1701.

[24] YANG J Y, GAO X B, LI L D, et al. SOLVER: scene-object interrelated visual emotion reasoning network[J]. IEEE Transactions on Image Processing, 2021, 30: 8686-8701. doi: 10.1109/TIP.2021.3118983

[25] DENG Z L, ZHU Q R, HE P, et al. A saliency detection and gram matrix transform-based convolutional neural network for image emotion classification[J]. Security and Communication Networks, 2021, 2021: 6854586.

[26] PENG G Q. Research on key issues of image emotional semantic analysis based on deep learning[D]. Kunming: Yunnan University, 2021 (in Chinese).

[27] PENG K C, SADOVNIK A, GALLAGHER A, et al. Where do emotions come from? predicting the emotion stimuli map[C]//Proceedings of the IEEE International Conference on Image Processing. Piscataway: IEEE Press, 2016: 614-618.

[28] SUN M, YANG J F, WANG K, et al. Discovering affective regions in deep convolutional neural networks for visual sentiment prediction[C]//Proceedings of the IEEE International Conference on Multimedia and Expo. Piscataway: IEEE Press, 2016: 1-6.

[29] YANG J F, SHE D Y, SUN M, et al. Visual sentiment prediction based on automatic discovery of affective regions[J]. IEEE Transactions on Multimedia, 2018, 20(9): 2513-2525. doi: 10.1109/TMM.2018.2803520

[30] ZHANG J, LIU X Y, CHEN M, et al. Image sentiment classification via multi-level sentiment region correlation analysis[J]. Neurocomputing, 2022, 469: 221-233. doi: 10.1016/j.neucom.2021.10.062

[31] XIONG H T, LIU Q, SONG S Y, et al. Region-based convolutional neural network using group sparse regularization for image sentiment classification[J]. EURASIP Journal on Image and Video Processing, 2019, 2019(1): 30. doi: 10.1186/s13640-019-0433-8

[32] SHEN Z, CUI C R, DONG G X, et al. Unified image aesthetic and emotional prediction based on deep multi-task learning[J]. Journal of Software, 2023, 34(5): 2494-2506 (in Chinese).

[33] ZHANG H, LUO G F, YUE Y Y, et al. Affective image recognition with multi-attribute knowledge in deep neural networks[J]. Multimedia Tools and Applications, 2024, 83(6): 18353-18379.

[34] HE K M, ZHANG X Y, REN S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 770-778.

[35] ZHANG H, XU D. Ethnic painting analysis based on deep learning[J]. Scientia Sinica (Informationis), 2019, 49(2): 204-215 (in Chinese). doi: 10.1360/N112018-00249

[36] SIMONYAN K, ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[EB/OL]. (2015-04-10)[2023-06-01]. http://arxiv.org/abs/1409.1556.

[37] HUANG G, LIU Z, VAN DER MAATEN L, et al. Densely connected convolutional networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 2261-2269.

[38] SZEGEDY C, VANHOUCKE V, IOFFE S, et al. Rethinking the inception architecture for computer vision[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 2818-2826.

[39] SZEGEDY C, IOFFE S, VANHOUCKE V, et al. Inception-v4, inception-ResNet and the impact of residual connections on learning[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2017, 31(1): 1-7.

[40] ZHAO S C, JIA Z Z, CHEN H, et al. PDANet: polarity-consistent deep attention network for fine-grained visual emotion regression[C]//Proceedings of the 27th ACM International Conference on Multimedia. New York: ACM, 2019.

[41] SUN Z K, SARMA P, SETHARES W, et al. Learning relationships between text, audio, and video via deep canonical correlation for multimodal language analysis[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2020, 34(5): 8992-8999.

[42] YAO X X, SHE D Y, ZHANG H W, et al. Adaptive deep metric learning for affective image retrieval and classification[J]. IEEE Transactions on Multimedia, 2020, 23: 1640-1653.

[43] SELVARAJU R R, COGSWELL M, DAS A, et al. Grad-CAM: visual explanations from deep networks via gradient-based localization[J]. International Journal of Computer Vision, 2020, 128(2): 336-359. doi: 10.1007/s11263-019-01228-7
