Multi-source remote sensing image classification based on wavelet transform and parallel attention


摘要:

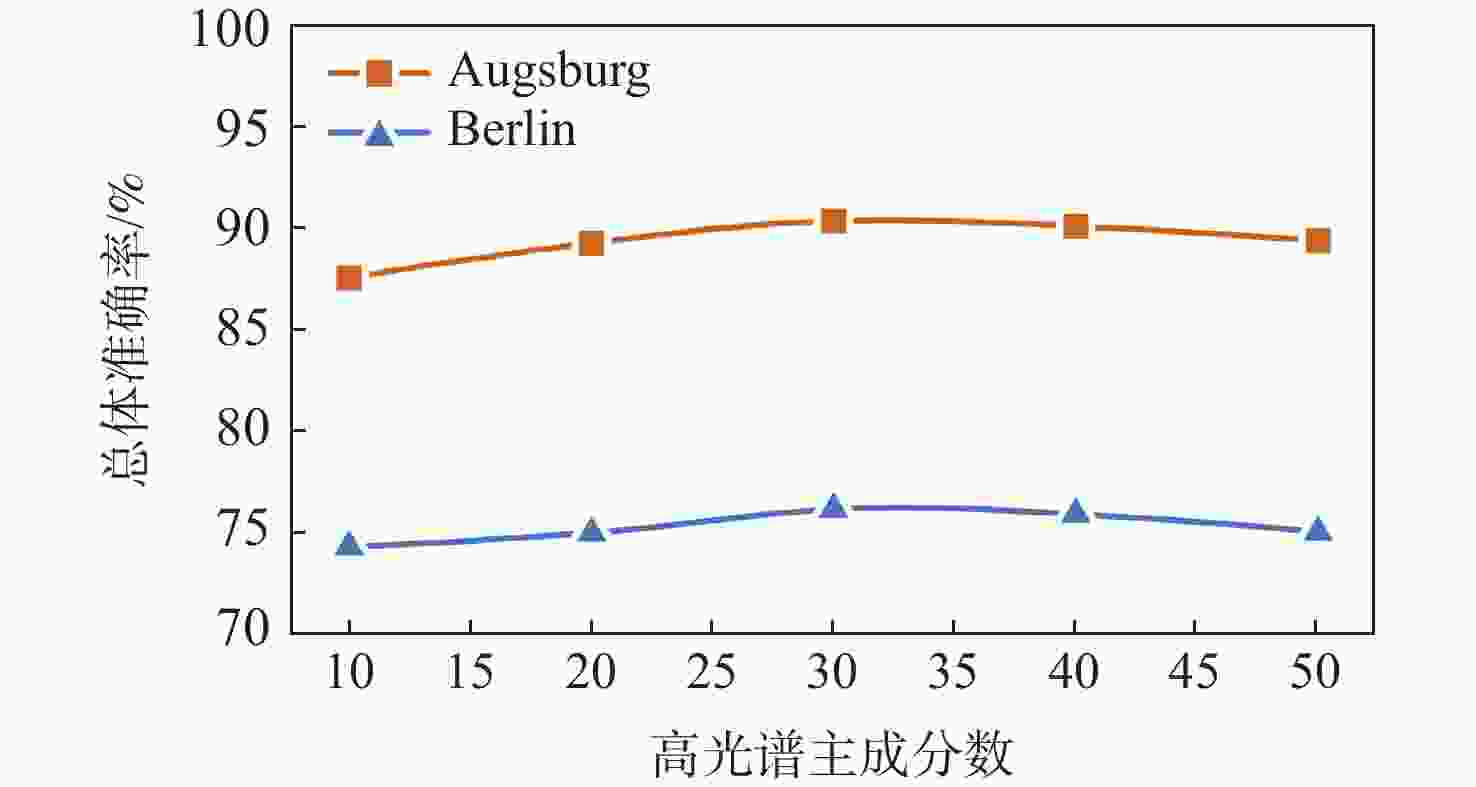

充分挖掘多源遥感图像数据特征的依赖关系,实现不同模态图像数据间的优势互补,已成为遥感领域的研究热点方向之一。现有的高光谱和合成孔径雷达(SAR)数据联合分类任务存在图像特征提取和特征表达不充分的问题,高频信息容易损失,不利于后续的分类任务,以及多源图像特征交互有限,多模态特征关联不紧密的关键难题。针对上述问题,围绕图像特征的鲁棒表达和多源特征的高效关联开展研究,提出了基于小波变换和平行注意力机制的多源遥感图像分类网络(WPANet)。基于小波变换的特征提取器可以充分利用频域分析技术,在可逆下采样的过程中充分捕捉粗/细粒度级别特征;基于平行注意力机制的特征融合器充分综合多模态遥感数据的一致性和差异性,完成强相关性特征的融合和生成,以提升分类准确度。在Augsburg和Berlin这2个真实多源遥感数据集上的实验表明:所提分类方法具有显著优势,总体准确率分别达到90.40%和76.23%,相比于深度特征交互网络(DFINet)等主流方法,在2个数据集上的总体准确率分别至少提升2.66%和12.22%。

Abstract: Exploiting the dependencies among the features of multi-source remote sensing images, so that different imaging modalities complement one another, has become a prominent research direction in remote sensing. Existing methods for the joint classification of hyperspectral and synthetic aperture radar (SAR) data face two key problems. First, image features are not extracted and represented sufficiently, so high-frequency information is easily lost, which hampers subsequent classification. Second, interaction among multi-source image features is limited, so multimodal features are only weakly correlated. To address these problems, this work studies robust representation of image features and efficient correlation of multi-source features, and proposes a multi-source remote sensing image classification network based on wavelet transform and a parallel attention mechanism (WPANet). The wavelet-transform-based feature extractor exploits frequency-domain analysis to capture both coarse- and fine-grained features during reversible downsampling. The parallel-attention-based feature fuser integrates both the consistency and the differences of multimodal remote sensing data, fusing and generating strongly correlated features to improve classification accuracy. Experiments on two real multi-source remote sensing datasets, Augsburg and Berlin, show clear advantages for the proposed method. Its overall accuracy reaches 90.40% and 76.23%, respectively. These results are relative improvements of at least 2.66% and 12.22% over mainstream methods such as the depthwise feature interaction network (DFINet), corresponding to gains of 2.34 and 8.30 percentage points over DFINet.
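The abstract says the extractor keeps high-frequency information by capturing coarse- and fine-grained features during reversible downsampling. The code below is a minimal sketch of that idea. It assumes a single-level orthonormal Haar wavelet; this section does not say which wavelet WPANet uses, and `haar_dwt2` and `haar_idwt2` are hypothetical names.

Each 2×2 block is replaced by four half-resolution coefficients:
- one low-frequency (coarse) coefficient;
- three high-frequency (fine) detail coefficients.

Unlike pooling, nothing is discarded, so the inverse transform recovers the input exactly.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def _zeros(h: int, w: int) -> Matrix:
    return [[0.0] * w for _ in range(h)]


def haar_dwt2(x: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """One level of an orthonormal 2D Haar DWT (hypothetical helper).

    Every non-overlapping 2x2 block [[a, b], [c, d]] yields one coefficient in
    each of four half-resolution sub-bands, so the value count is unchanged.
    Sub-band names (LH vs. HL) follow different conventions across libraries.
    """
    h, w = len(x), len(x[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, lh, hl, hh = (_zeros(h // 2, w // 2) for _ in range(4))
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]
            c, d = x[i + 1][j], x[i + 1][j + 1]
            ll[i // 2][j // 2] = (a + b + c + d) / 2  # low frequency: coarse structure
            lh[i // 2][j // 2] = (a + b - c - d) / 2  # detail: top row vs. bottom row
            hl[i // 2][j // 2] = (a - b + c - d) / 2  # detail: left column vs. right column
            hh[i // 2][j // 2] = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh


def haar_idwt2(ll: Matrix, lh: Matrix, hl: Matrix, hh: Matrix) -> Matrix:
    """Exact inverse of haar_dwt2: rebuilds each 2x2 block from its 4 coefficients."""
    h2, w2 = len(ll), len(ll[0])
    x = _zeros(2 * h2, 2 * w2)
    for i in range(h2):
        for j in range(w2):
            s, v, u, g = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            x[2 * i][2 * j] = (s + v + u + g) / 2          # a
            x[2 * i][2 * j + 1] = (s + v - u - g) / 2      # b
            x[2 * i + 1][2 * j] = (s - v + u - g) / 2      # c
            x[2 * i + 1][2 * j + 1] = (s - v - u + g) / 2  # d
    return x


image = [[float(4 * r + c + 1) for c in range(4)] for r in range(4)]  # 4x4 ramp, 1..16
ll, lh, hl, hh = haar_dwt2(image)
restored = haar_idwt2(ll, lh, hl, hh)
print(ll)                 # [[7.0, 11.0], [23.0, 27.0]]
print(restored == image)  # True
```

Stacking the four sub-bands along the channel axis gives a half-resolution input with four times as many channels. This is one common way to feed wavelet coefficients to a CNN; whether WPANet does exactly this is not stated in this section.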


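Table 1. Comparative experimental results on the Augsburg dataset

| Method | Forest (146/13345) | Residential area (264/30065) | Industrial area (21/3830) | Low plants (248/26543) | Allotment (52/523) | Commercial area (7/1632) | Water (23/1502) | OA | AA | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| SVM[15] | 90.55 | 89.81 | 23.03 | 83.73 | 34.23 | 9.71 | **45.92** | 81.60 | 53.82 | 73.17 |
| LBP-ELM[16] | 93.65 | 86.81 | 35.12 | 83.21 | 49.33 | 7.94 | 44.99 | 81.47 | 57.29 | 73.41 |
| TBCNN[17] | 94.77 | 95.01 | **71.17** | 85.33 | 56.41 | 15.14 | 22.30 | 87.11 | 62.87 | 81.69 |
| ContextCNN[18] | 94.57 | **97.25** | 51.46 | 86.25 | 56.02 | 13.68 | 21.57 | 87.24 | 60.11 | 81.82 |
| DFINet[19] | **95.38** | 95.84 | 69.79 | 86.65 | **64.05** | 13.86 | 28.47 | 88.06 | 64.86 | 82.98 |
| WPANet | 94.81 | 93.66 | 67.52 | **95.49** | 50.10 | **19.91** | 44.81 | **90.40** | **66.61** | **86.28** |

Note:
- The class columns give per-class classification accuracy. OA = overall accuracy, AA = average accuracy, Kappa = Kappa coefficient.
- All values are in % (Kappa is scaled by 100).
- Bold values indicate the best result in each column.
- Numbers in parentheses are the numbers of training/test samples.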
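The summary columns follow from the per-class columns by standard definitions:
- AA is the unweighted mean of the per-class accuracies.
- OA is the mean of the per-class accuracies weighted by the number of test samples in each class. This equals total correct predictions divided by total test samples.

The sketch below assumes these standard definitions rather than anything specific to this paper. It uses only the WPANet row and the test counts from Table 1, and reproduces the reported OA and AA up to the rounding of the per-class values. Kappa cannot be recovered this way because it needs the full confusion matrix.

```python
def overall_accuracy(per_class_acc, test_counts):
    """OA: per-class accuracies weighted by test-sample counts (= correct / total)."""
    total = sum(test_counts)
    return sum(a * n for a, n in zip(per_class_acc, test_counts)) / total


def average_accuracy(per_class_acc):
    """AA: unweighted mean of the per-class accuracies."""
    return sum(per_class_acc) / len(per_class_acc)


# WPANet row of Table 1 (Augsburg), in class order: Forest, Residential area,
# Industrial area, Low plants, Allotment, Commercial area, Water.
wpanet_acc = [94.81, 93.66, 67.52, 95.49, 50.10, 19.91, 44.81]
test_counts = [13345, 30065, 3830, 26543, 523, 1632, 1502]

oa = overall_accuracy(wpanet_acc, test_counts)
aa = average_accuracy(wpanet_acc)
print(f"OA = {oa:.2f}%, AA = {aa:.2f}%")  # OA = 90.40%, AA = 66.61%
```

The gap between OA and AA comes from class imbalance. Large, well-classified classes such as Residential area dominate OA, while small classes such as Commercial area pull AA down.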

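Table 2. Comparative experimental results on the Berlin dataset

| Method | Forest (443/54484) | Residential area (423/268219) | Industrial area (499/19067) | Low plants (376/58906) | Soil (331/17095) | Allotment (280/13025) | Commercial area (298/24526) | Water (170/6502) | OA | AA | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SVM[15] | 50.08 | 61.07 | 30.68 | 84.29 | 87.30 | 54.00 | 26.61 | 65.40 | 60.48 | 57.43 | 45.36 |
| LBP-ELM[16] | **86.17** | 36.95 | 45.46 | 84.09 | 89.72 | 0.00 | 0.35 | 50.17 | 48.32 | 49.25 | 34.65 |
| TBCNN[17] | 76.47 | 62.42 | 43.22 | 78.82 | 76.33 | 73.44 | **49.76** | **82.28** | 65.81 | 67.84 | 41.79 |
| ContextCNN[18] | 77.22 | 63.69 | 61.44 | 73.77 | 87.22 | **82.88** | 31.13 | 74.24 | 66.31 | 68.95 | 54.03 |
| DFINet[19] | 68.95 | 67.52 | 43.42 | 81.77 | 75.58 | 80.05 | 40.94 | 79.87 | 67.93 | 67.26 | 55.22 |
| WPANet | 69.35 | **81.06** | **62.22** | **85.17** | **90.47** | 61.21 | 25.08 | 80.04 | **76.23** | **69.32** | **64.36** |

Note:
- The class columns give per-class classification accuracy. OA = overall accuracy, AA = average accuracy, Kappa = Kappa coefficient.
- All values are in % (Kappa is scaled by 100).
- Bold values indicate the best result in each column.
- Numbers in parentheses are the numbers of training/test samples.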
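The gains quoted in the abstract ("at least 2.66% and 12.22%") are relative improvements in OA over the strongest baseline on each dataset, which is DFINet in both tables. They are not percentage-point differences.

The short sketch below reproduces both kinds of gain from the OA columns of Tables 1 and 2. The helper name `gain_over_best_baseline` is hypothetical.

```python
# Overall accuracy (%) copied from the OA columns of Tables 1 and 2.
oa = {
    "Augsburg": {"SVM": 81.60, "LBP-ELM": 81.47, "TBCNN": 87.11,
                 "ContextCNN": 87.24, "DFINet": 88.06, "WPANet": 90.40},
    "Berlin": {"SVM": 60.48, "LBP-ELM": 48.32, "TBCNN": 65.81,
               "ContextCNN": 66.31, "DFINet": 67.93, "WPANet": 76.23},
}


def gain_over_best_baseline(scores, proposed="WPANet"):
    """Return (best baseline, gain in percentage points, relative gain in %)."""
    baselines = {name: v for name, v in scores.items() if name != proposed}
    best = max(baselines, key=baselines.get)
    points = scores[proposed] - baselines[best]
    return best, points, 100.0 * points / baselines[best]


gains = {dataset: gain_over_best_baseline(s) for dataset, s in oa.items()}
for dataset, (best, points, rel) in gains.items():
    print(f"{dataset}: vs {best}, +{points:.2f} points, +{rel:.2f}% relative")
# Augsburg: vs DFINet, +2.34 points, +2.66% relative
# Berlin: vs DFINet, +8.30 points, +12.22% relative
```

Because DFINet has the highest OA among the baselines on both datasets, these figures are the minimum gains over all compared methods.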

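Table 3. Ablation results for the wavelet transform feature extractor and the parallel attention-based feature fuser

| Network structure | OA on Augsburg (%) | OA on Berlin (%) |
|---|---|---|
| Convolutional feature extraction network | 87.27 | 73.86 |
| Wavelet transform feature extractor | 89.71 | 75.86 |
| Parallel attention feature fuser | 88.96 | 75.17 |
| Wavelet transform feature extractor + parallel attention feature fuser | **90.40** | **76.23** |

Note: Bold values indicate the best results.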
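Reading Table 3 against the convolutional baseline makes each module's contribution explicit. The sketch below computes each variant's OA gain, in percentage points, over the convolutional feature extraction network. It is plain arithmetic on the reported numbers and says nothing about how the variants were trained.

```python
# OA (%) from Table 3 as (Augsburg, Berlin).
ablation = {
    "Convolutional feature extraction network": (87.27, 73.86),
    "Wavelet transform feature extractor": (89.71, 75.86),
    "Parallel attention feature fuser": (88.96, 75.17),
    "Wavelet extractor + parallel attention fuser": (90.40, 76.23),
}

baseline = ablation["Convolutional feature extraction network"]

# Gain of each variant over the convolutional baseline, in percentage points.
gains = {
    name: tuple(round(v - b, 2) for v, b in zip(scores, baseline))
    for name, scores in ablation.items()
}

for name, (g_aug, g_ber) in gains.items():
    print(f"{name}: {g_aug:+.2f} (Augsburg), {g_ber:+.2f} (Berlin)")
# Convolutional feature extraction network: +0.00 (Augsburg), +0.00 (Berlin)
# Wavelet transform feature extractor: +2.44 (Augsburg), +2.00 (Berlin)
# Parallel attention feature fuser: +1.69 (Augsburg), +1.31 (Berlin)
# Wavelet extractor + parallel attention fuser: +3.13 (Augsburg), +2.37 (Berlin)
```

Each module improves OA on its own, and the full model gives the largest gain on both datasets. The combined gain is smaller than the sum of the two individual gains, so the modules' contributions partly overlap rather than simply adding up.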

[1] WANG C, ZHANG L, WEI W, et al. Dynamic super-pixel normalization for robust hyperspectral image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5505713.

[2] BIOUCAS-DIAS J M, PLAZA A, CAMPS-VALLS G, et al. Hyperspectral remote sensing data analysis and future challenges[J]. IEEE Geoscience and Remote Sensing Magazine, 2013, 1(2): 6-36. doi: 10.1109/MGRS.2013.2244672

[3] MOREIRA A, PRATS-IRAOLA P, YOUNIS M, et al. A tutorial on synthetic aperture radar[J]. IEEE Geoscience and Remote Sensing Magazine, 2013, 1(1): 6-43. doi: 10.1109/MGRS.2013.2248301

[4] MAN Q X, DONG P L, GUO H D. Pixel- and feature-level fusion of hyperspectral and lidar data for urban land-use classification[J]. International Journal of Remote Sensing, 2015, 36(6): 1618-1644. doi: 10.1080/01431161.2015.1015657

[5] HU J L, GHAMISI P, SCHMITT A, et al. Object based fusion of polarimetric SAR and hyperspectral imaging for land use classification[C]//2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing. Piscataway: IEEE Press, 2016: 1-5.

[6] CHEN Y S, LI C Y, GHAMISI P, et al. Deep fusion of remote sensing data for accurate classification[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(8): 1253-1257. doi: 10.1109/LGRS.2017.2704625

[7] HONG D F, GAO L R, HANG R L, et al. Deep encoder-decoder networks for classification of hyperspectral and LiDAR data[J]. IEEE Geoscience and Remote Sensing Letters, 2020, 19: 5500205.

[8] ZHAO X D, TAO R, LI W, et al. Joint classification of hyperspectral and LiDAR data using hierarchical random walk and deep CNN architecture[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 58(10): 7355-7370. doi: 10.1109/TGRS.2020.2982064

[9] FENG M, GAO F, FANG J, et al. Hyperspectral and lidar data classification based on linear self-attention[C]//2021 IEEE International Geoscience and Remote Sensing Symposium. Piscataway: IEEE Press, 2021: 2401-2404.

[10] LI W, GAO Y H, ZHANG M M, et al. Asymmetric feature fusion network for hyperspectral and SAR image classification[J]. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(10): 8057-8070. doi: 10.1109/TNNLS.2022.3149394

[11] HU J, SHEN L, SUN G. Squeeze-and-excitation networks[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7132-7141.

[12] KURZ F, ROSENBAUM D, LEITLOFF J, et al. Real time camera system for disaster and traffic monitoring[C]//Proceedings of International Conference on SMPR 2011. Tehran: University of Tehran, 2011: 1-6.

[13] BAUMGARTNER A, GEGE P, KÖHLER C, et al. Characterisation methods for the hyperspectral sensor HySpex at DLR’s calibration home base[C]//Sensors, Systems, and Next-Generation Satellites XVI. Bellingham: SPIE, 2012: 85331H.

[14] HONG D F, HU J L, YAO J, et al. Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 178: 68-80. doi: 10.1016/j.isprsjprs.2021.05.011

[15] MELGANI F, BRUZZONE L. Classification of hyperspectral remote sensing images with support vector machines[J]. IEEE Transactions on Geoscience and Remote Sensing, 2004, 42(8): 1778-1790. doi: 10.1109/TGRS.2004.831865

[16] LI W, CHEN C, SU H J, et al. Local binary patterns and extreme learning machine for hyperspectral imagery classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(7): 3681-3693. doi: 10.1109/TGRS.2014.2381602

[17] XU X D, LI W, RAN Q, et al. Multisource remote sensing data classification based on convolutional neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(2): 937-949. doi: 10.1109/TGRS.2017.2756851

[18] LEE H, KWON H. Going deeper with contextual CNN for hyperspectral image classification[J]. IEEE Transactions on Image Processing, 2017, 26(10): 4843-4855. doi: 10.1109/TIP.2017.2725580

[19] GAO Y H, LI W, ZHANG M M, et al. Hyperspectral and multispectral classification for coastal wetland using depthwise feature interaction network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 5512615.
